Report

The Enterprise Guide to AI Governance and Risk Resilience

October 30, 2025

TL;DR

- AI adoption is accelerating across enterprises, creating risks that range from operational disruption to reputational harm and regulatory exposure, and demanding structured oversight.

- Breaking down AI risks into categories like cybersecurity, bias, privacy, and compliance helps organizations understand exposures and build tailored governance strategies.

- Regulators worldwide, from the EU to Japan, are setting expectations through binding legislation or guidance, making proactive AI risk management unavoidable for enterprises.

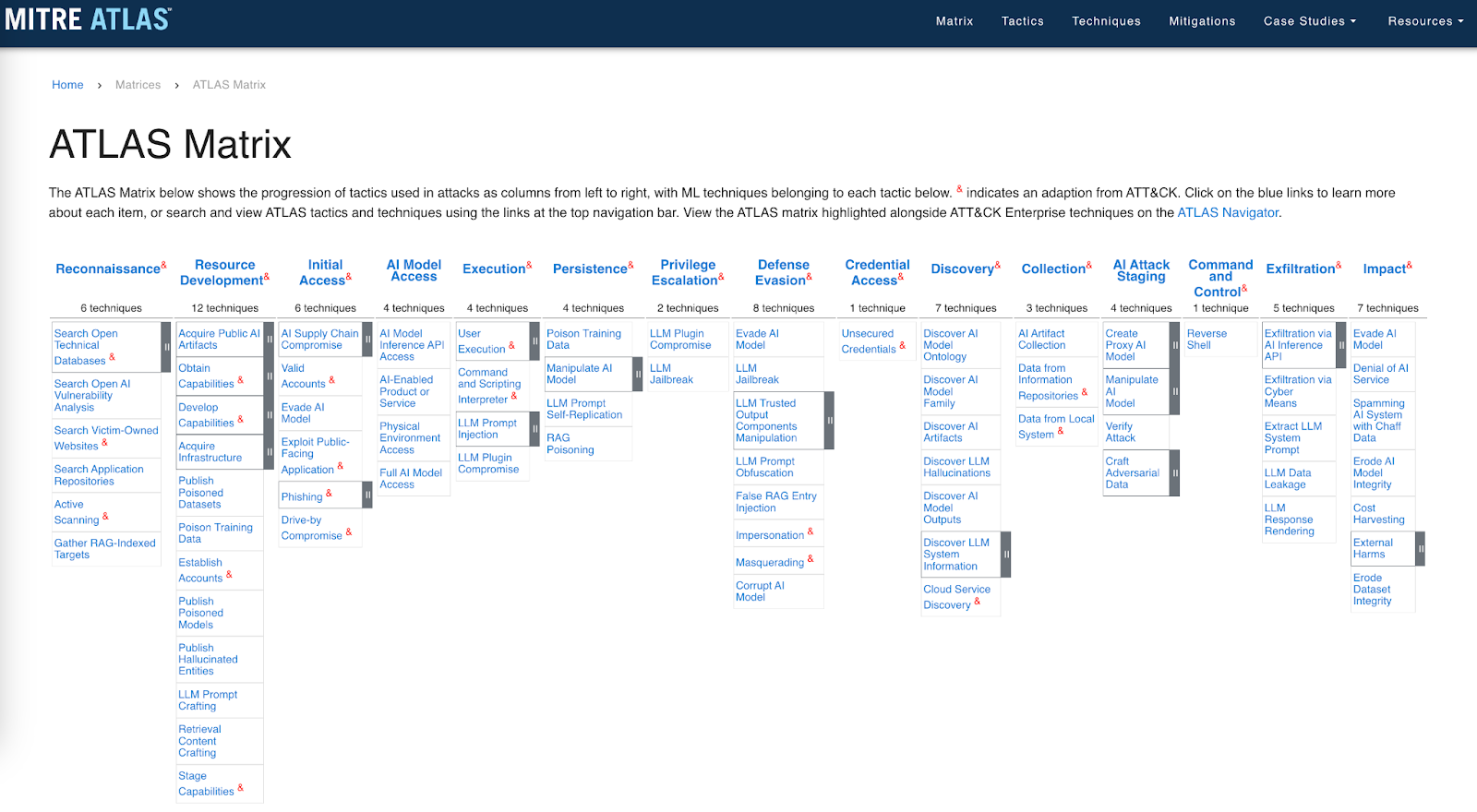

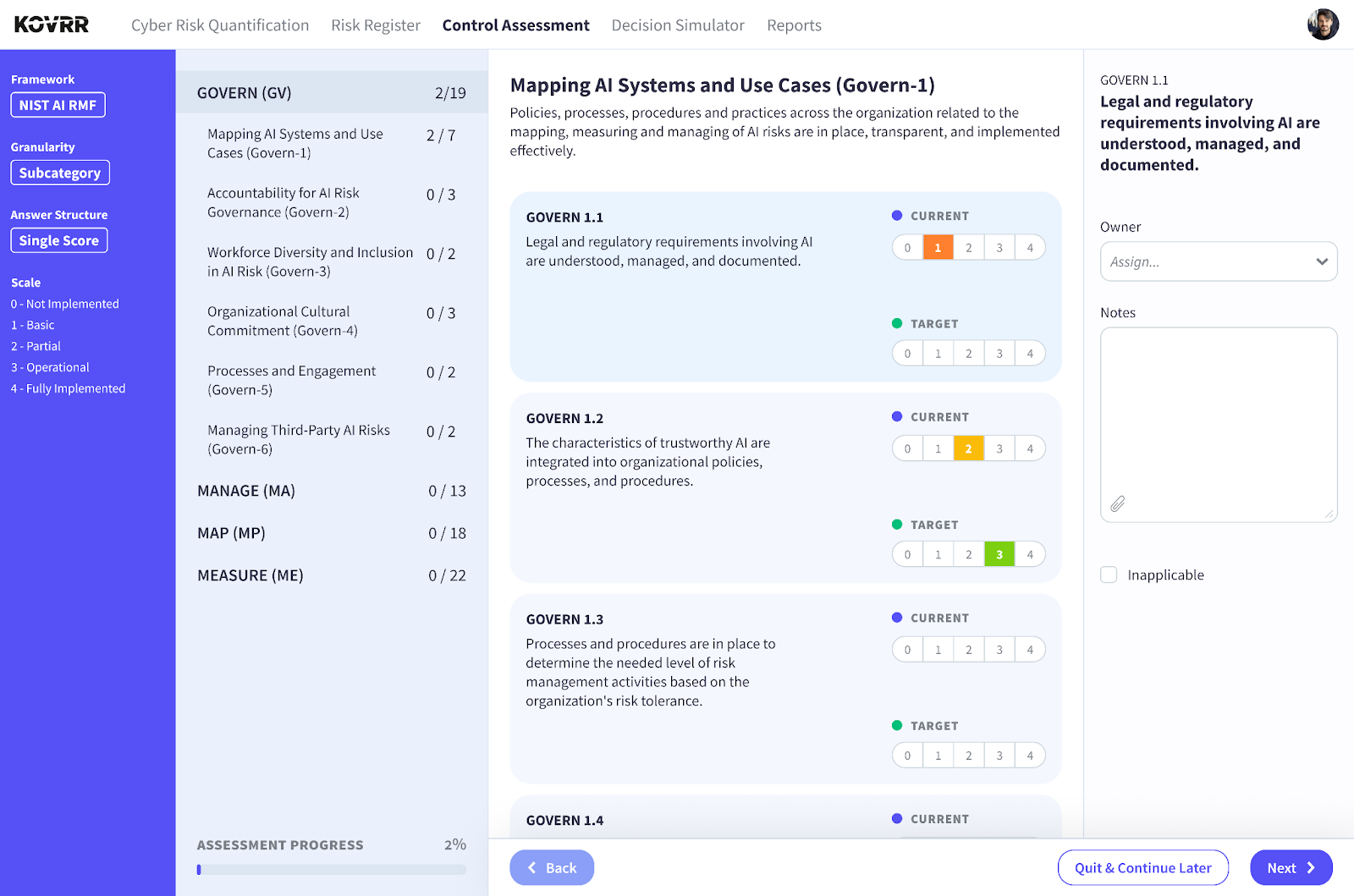

- Frameworks such as NIST AI RMF and ISO 42001 provide structure for governance, while MITRE ATLAS adds adversary-informed insights into real-world threats.

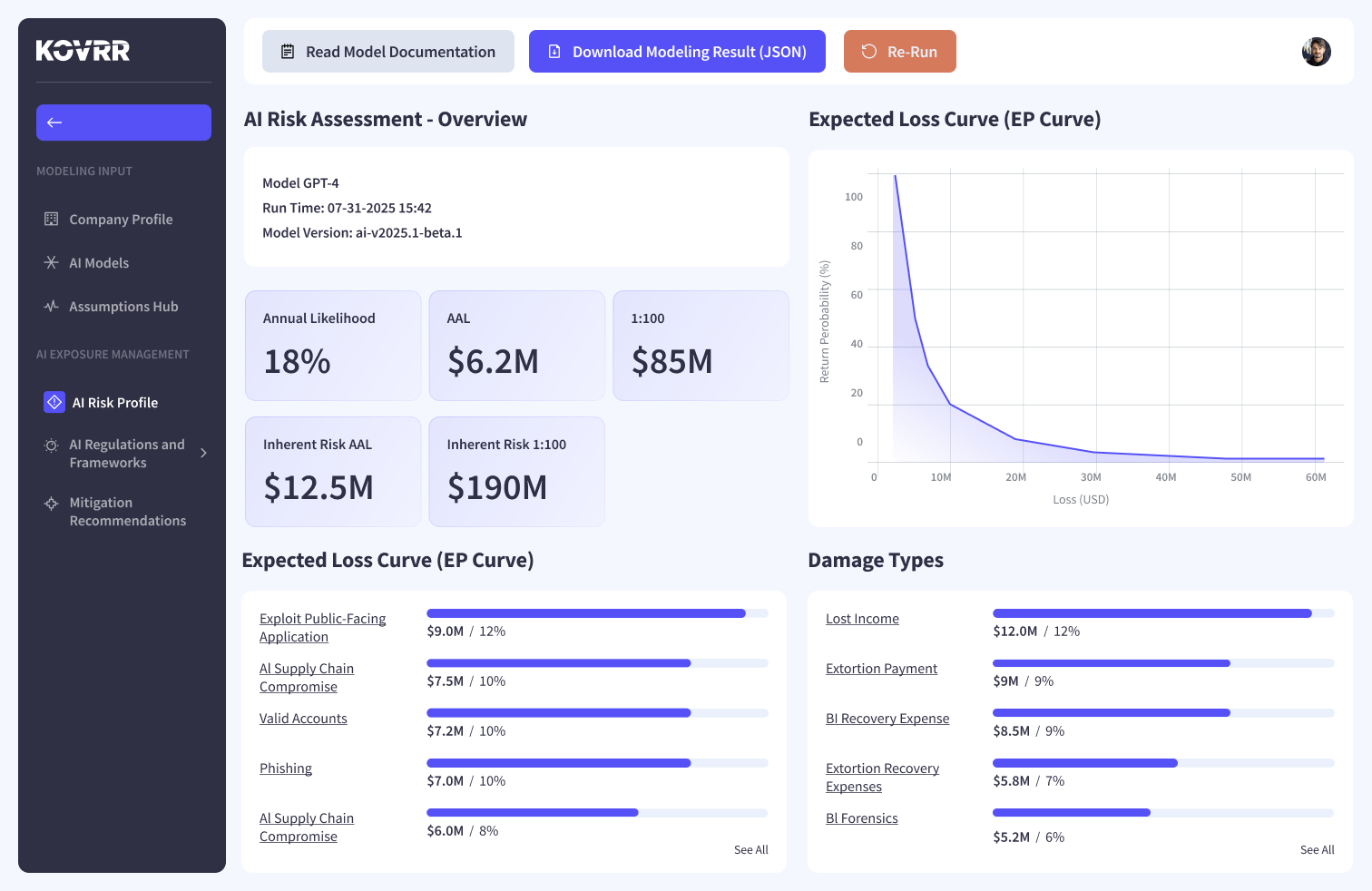

- AI risk assessments provide visibility into safeguard maturity and hidden gaps, and when paired with quantification, they transform findings into measurable financial and operational impact.

- Organizations that build visibility by assessing and quantifying AI risk today will be positioned to meet regulatory mandates, defend decisions, and strengthen resilience as AI evolves.

AI Adoption Outpaces Governance and Controls

Artificial intelligence (AI) usage in the marketplace has rapidly moved from experimental to essential, embedding itself into the daily operations of global organizations across industries, intent on maximizing productivity. Generative AI (GenAI) in particular is accelerating decisions, automating processes, and delivering new levels of output that executives are eager to harness. However, as adoption grows, so too do the associated risks, especially when organizations lack visibility into where and how AI is employed.

AI-related incidents have emerged far faster than many stakeholders anticipated, let alone planned for, and their potential for disruption now rivals, if not surpasses, that of traditional cyber risk. In fact, according to IBM's annual Cost of a Data Breach Report, 97% of organizations that experienced an AI-related security event lacked proper access controls. Moreover, of the 600 total companies surveyed, 63% admitted they had no AI governance policies whatsoever.

This lack of preparedness not only leaves the business vulnerable but also exposes an increasingly interconnected society. As a result, regulators worldwide have jumped into action, creating binding regulations that set the standard for responsible AI governance. The EU, for instance, has already passed the AI Act, while other nations, including the UK, Canada, Australia, and Japan, are moving quickly to impose their own oversight models.

Investors and customers are likewise beginning to demand accountability, making trust in AI perceptibly contingent on demonstrable oversight practices. Given the trajectory, it’s plain that AI governance should no longer be considered an optional, secondary concern for stakeholders. The way these systems and applications are managed, and the visibility leaders have into their use, will determine how resilient organizations are to disruption and whether AI becomes an advantage or a costly liability.

Understanding AI Risk

Enterprise-level AI risk is the potential of GenAI or any other type of artificial intelligence system to cause losses for an organization or its stakeholders, and can originate from various sources, which is why it’s often broken down into distinct types. These AI risk categories build visibility into the nature of each risk, the conditions that make it more likely to occur, and the precise ways it might leave an impact.

To put this in perspective, the U.S. Department of Homeland Security highlights three foundational ways AI can introduce risk,

- The use of AI to enhance, plan, or scale physical or cyberattacks on critical infrastructure.

- Targeted attacks on GenAI systems themselves, especially those supporting critical operations.

- Failures in the design or implementation of AI tools, leading to malfunctions or unintended consequences that disrupt essential services.

Those examples capture the broad risks at a national level, but within organizations, the picture is even more layered. In practice, a single AI-related incident usually spans multiple types at once, compounding the consequences and making the situation even more difficult to manage. Still, by understanding these different types of AI risk, stakeholders gain an added layer of visibility that enables a robust, comprehensive risk management strategy.

AI Risk Type 1: Cybersecurity Risk

Cybersecurity risk in AI is the one stakeholders are generally most familiar with, and refers to the possibility that data or critical systems are compromised through digital means. For instance, attackers may exploit vulnerabilities in public-facing AI applications, thus gaining unauthorized access into the organization and the power to manipulate outputs in ways that erode trust.

Managing this aspect of AI risk involves securing the AI supply chain, maintaining visibility into code and model provenance, and protecting AI systems with the same rigor as other critical assets.

AI Risk Type 2: Operational Risk

Operational risk is the disruption caused by AI-related vulnerabilities that interfere with an organization’s ability to function as intended. AI operational risks can stem from technical faults in models or flawed system integration. Often, these operational problems will arise gradually as AI systems slowly drift away from acceptable performance standards without being detected. Effective management requires actions such as continuous monitoring and, more importantly, documented maintenance accountability.

AI Risk Type 3: Bias & Ethical Risk

Bias and ethical risk in GenAI arise when systems produce outcomes that are misaligned with established workplace and societal standards. Problems can originate from skewed or incomplete training data or disagreements about how AI is applied. When materialized, the impact of this risk factor can be severe, ranging from legal exposure to internal cultural harm and the breakdown of employee trust. Addressing bias and ethical issues demands heavy scrutiny of data sources and incorporating ethical review into every stage of AI development.

AI Risk Type 4: Privacy Risk

Privacy risk in AI concerns the misuse of or unauthorized access to classified information. This mishandling usually occurs when models are trained on data that contains identifiable details and when GenAI tools interact with unsecured data sources. In some cases, even anonymized data can be re-identified through correlation with other datasets, making these risks all the more ominous. Managing the privacy component of AI thus requires enforcing strict data governance and maintaining visibility and control over training and inference data.

AI Risk Type 5: Regulatory & Compliance Risk

Regulatory and compliance risk in AI proliferates when systems or their subsequent usage fail to meet legal or industry requirements. The consequences of non-compliance may include fines or operational restrictions, along with reputational damage that can limit future market opportunities. Mitigating these types of AI risks involves integrating regulatory awareness into AI strategy and making certain that compliance is treated as an ongoing operational priority rather than a one-time requirement.

AI Risk Type 6: Reputational & Business Risk

Reputational and business risk in AI derives from actions or outcomes that weaken the organization’s position in the market. Indeed, public trust can erode quickly if AI-driven decisions are perceived as biased or unsafe, or poorly controlled, and in many situations, the damage extends to long-term brand identity, making recovery costly and slow. Accounting for this risk requires both transparency from businesses regarding how AI is used and an explicit commitment to aligning AI initiatives with internal policies and publicized core values.

AI Risk Type 7: Societal & Existential Risk

Finally, societal and existential risk in AI involves impacts that extend externally. These types of risks include large-scale job displacement, erosion of democratic processes through AI-enabled misinformation, or, at the more extreme end, those science fiction scenarios in which AI poses a direct threat to human survival or the continuity of civilization. Addressing this category of risk calls for industry collaboration and demands that regulators enact legislation that helps to ensure, as much as it can, that AI development aligns with the broader public interest.

The Expanding AI Regulatory Landscape

No longer confined to academic debate and advisory papers, AI oversight has entered a new phase of strategic and tactical importance. Governments across the world have begun defining expectations, some through binding legislation and others via high-level guidance. The specifics may vary by jurisdiction, but there is a growing consensus that AI governance, supported by documented visibility into systems and their lifecycle, is a core component of maintaining market stability and public trust.

European Union: The Artificial Intelligence (AI) Act

The European Union (EU) released the AI Act in August 2024, leading the way as the first major regulator to do so, and giving organizations a two-year window before full enforcement takes effect. The Act begins by stating that the legislation exists to improve and promote the safe usage of AI systems. It then proceeds to make distinctions between the various forms of the technology based on the risk it poses to society, defining categories such as prohibited, which are banned outright, high-risk, limited-risk, and minimal-risk.

High-risk AI systems, discernibly, are subject to the most stringent oversight, with ten dedicated articles detailing the obligations of both providers and users. These provisions cover a wide range of requirements, including AI risk management, data governance, transparency, human oversight, and post-market monitoring. GenAI and other foundational models are addressed specifically in a standalone chapter, which details obligations such as disclosing training data sources and documenting model design inputs.

Notably, the Act elevates governance responsibilities to the boardroom level. Under Article 66, management boards are assigned specific tasks to ensure compliance, embedding AI accountability into the highest tier of the corporate agenda. Should organizations employ any of the prohibited AI, they’ll face a penalty of up to €35 million or 7% of global annual revenue. In comparison, non-compliance with the AI Act's provisions can result in fines of up to €15 million or 3% of global annual revenue.

United States: Fragmented But Intensifying Oversight

Unlike the EU, the US has not yet enacted a comprehensive federal law dedicated to AI governance and risk management. Regulators are instead leveraging existing statutes and agency powers to police market usage. The Federal Trade Commission (FTC), for instance, warned companies that deceptive AI practices, such as writing fake reviews, fall under consumer protection law. Similarly, the Equal Employment Opportunities Commission (EEOC) issued guidance regarding the illegality of using AI for certain hiring practices.

In 2024, US Congress members introduced the Federal Artificial Intelligence Risk Management Act. Although not officially ratified as of 2025, this bipartisan and bicameral bill would require federal agencies and vendors to incorporate NIST’s AI RMF into their operations. Meanwhile, certain states and regions are advancing their own legislative agendas. New York City launched its AI Action Plan in 2023, and Colorado, in 2024, passed the Colorado Artificial Intelligence Act (CAIA), the first comprehensive state law addressing high-risk AI systems.

United Kingdom: A Pro-Innovation Approach

The United Kingdom (UK) opted against a singularly binding piece of AI legislation, establishing its oversight practices through a pro-innovation regulatory approach. The National AI Strategy, released in 2021 and refreshed a year later, set out details of a ten-year vision to position the UK as a global AI superpower and highlighted the importance of investing in the AI ecosystem, ensuring that GenAI will deliver benefits across sectors and be governed responsibly.

Parliament consequently erected the Office for Artificial Intelligence, a dedicated authority nestled under the Department for Science, Innovation, and Technology. Rather than impose broad AI usage restrictions, the UK relies on sector-specific regulators such as the Information Commissioner's Office (ICO) and Financial Conduct Authority (FCA) to enforce the five guiding principles of safety, transparency, fairness, accountability, and contestability, as published in the AI Regulation White Paper.

The UK has also entrenched itself in the international conversation, hosting the AI Safety Summit in November 2023. The Summit brought together global governmental representatives and culminated in the signing of the Bletchley Declaration, the world’s first international agreement acknowledging the catastrophic risks AI could pose through misuse or loss of control, particularly in areas such as cybersecurity, biotechnology, and disinformation.

Canada: High-Impact AI Under Scrutiny

Canada is advancing its AI oversight with the proposed Artificial Intelligence and Data Act (AIDA), which, if ratified, will become the country's first national AI law, focused primarily on systems deemed "high-impact." The legislation requires Canadian-based organizations to identify harmful scenarios that GenAI usage could cause, and then implement mitigation measures. Throughout the usage lifecycle of these high-impact systems, stakeholders would be expected to maintain ongoing monitoring and upkeep.

AIDA also emphasizes transparency, stating that providers must keep detailed documentation on training data and system design, while offering mechanisms for individuals to contest harmful outcomes. Redress is also a key component of the regulation, with enforcement powers granted to the Minister of Innovation, Science, and Industry, which could issue penalties of up to C$25 million or 5% of global annual revenue, whichever is higher. AIDA is among the stricter AI governance laws, despite its narrower focus on high-impact use cases.

Australia: Moving Toward Mandatory Guardrails

Like Canada, Australia is concentrated on high-impact use cases, building a risk-based approach that seeks to establish "mandatory guardrails" against AI risk. Following a 2023 public consultation on safe and responsible AI, the government’s January 2024 interim response concluded that voluntary commitments were insufficient. In September 2024, the Department of Industry, Science, and Resources released a proposal paper outlining preventative obligations across the AI lifecycle for developers and deployers of high-risk systems.

While legislation is still being amended, Australia continues to draw on existing regulations to inform the trajectory of its AI governance model. The nation's privacy regulator, the OAIC, for example, issued guidance for training and deploying generative models, and the eSafety Commissioner has published a position statement on generative AI harms, both of which will be taken into account for the future national law. The 2024–25 federal budget earmarked funding to support responsible AI, reinforcing the policy push even as a national statute remains pending.

Japan: Soft-Law and Human-Centric Principles

Japan’s regulatory model diverges sharply from the EU’s, relying on voluntary standards and existing laws rather than binding requirements. In 2025, parliament approved the AI Promotion Act, its first national framework focused on encouraging development while embedding human-centric concepts such as fairness and accountability. The Act builds on Japan’s earlier AI principles launched in 2019 and its National AI Strategy, and it operationalizes oversight through voluntary AI Guidelines for Business.

Within the international arena, Japan has acted as a bridge between Western and Asian governance models, launching the G7 Hiroshima AI Process in 2023. The forum produced a voluntary code of conduct for advanced AI systems, with the aim of harmonizing approaches to GenAI deployment across borders. Extending its reach and cementing its position as an AI governance architect, Japan then established the Hiroshima AI Process Friends Group, bringing in dozens of non-G7 countries to promote wider AI adoption and usage alignment.

Frameworks for AI Governance and Risk Management

Even as national and regional authorities propel their regulatory approaches to AI governance and oversight forward, coverage remains disparate, with many requirements still in flux. This uncertainty, however, has not deterred organizations from acting. On the contrary, many stakeholders recognize that establishing governance and risk management mechanisms for GenAI is not only prudent preparation for any upcoming regulatory changes but also a strategic necessity. Enterprises that address AI proactively secure their competitive advantage more firmly than those that delay.

But because AI risk is still so new, there lies a great challenge in determining which safeguards to apply and how to embed them effectively. As a result, GRC and security leaders are increasingly employing management frameworks that distill high-level concepts into operational practice. Standards such as NIST’s AI RMF and ISO/IEC 42001 offer reliable methods that teams can use to gain visibility into AI risks and then systematically implement controls across the AI lifecycle. By leveraging such frameworks, enterprises can simultaneously start building resilience and demonstrate alignment with future regulations.

The NIST AI Risk Management Framework

Developed by the US National Institute of Standards and Technology (NIST), widely known for its Cybersecurity Framework (CSF), the AI Risk Management Framework (RMF) has quickly become one of the most referenced guides for responsible AI adoption. After a period of extensive public consultation, the framework was officially released in January 2023 and, today, remains a voluntary resource designed for cross-industry use, helping organizations to gain visibility into, identify, assess, and manage AI-related risks.

The NIST AI RMF, much like the CSF, is structured on a set of core functions. Unlike the CSF, however, which revolves around six pillars, the AI RMF's foundation consists of four, including Govern, Map, Measure, and Manage, each of which comes with categories and subcategories that translate broad risk concepts into actionable steps. NIST's AI standard was specifically designed to be adaptable to different contexts, offering a blueprint that can evolve to keep pace with rapid technological changes.

ISO/IEC 42001: Global AI Management Systems Standard

In late 2023, the International Organization for Standardization (ISO), along with its long-time collaborator, the International Electrotechnical Commission (IEC), published ISO/IEC 42001, laying out specific requirements for "establishing, implementing, maintaining, and continually improving an Artificial Intelligence Management System (AIMS)." This standard is the first certifiable management system worldwide focused exclusively on AI, making it applicable to organizations of any size or sector that engage with GenAI or AI-based products and services.

Like the NIST AI RMF, ISO/IEC 42001 is entirely voluntary. Still, it offers stakeholders a solid benchmark on which to build responsible AI practices and demonstrate adherence to any upcoming laws. Similarly, its fabric mirrors that of other widely adopted ISO standards, such as ISO/IEC 27001, which makes integration into existing governance programs more practical.

Components of the framework include leadership commitment, ongoing performance evaluations, and traceability that improves visibility, while Annex A enumerates controls that can reduce AI exposure.

Mapping Specific AI Threats With MITRE ATLAS

Frameworks such as NIST’s AI RMF and ISO/IEC 42001 provide organizations with the structure to identify and manage AI risks. Yet translating those high-level safeguards into concrete adversary behaviors requires a different lens.

Helping enterprise security and risk managers bridge that gap, the MITRE Corporation published the Adversarial Threat Landscape for Artificial-Intelligence Systems (ATLAS) framework. Much like the MITRE ATT&CK framework has become the standard reference for conventional cyberattacks, the MITRE ATLAS provides visibility into how adversaries exploit GenAI systems in the wild through a detailed map of tactics and techniques.

Specifically, it outlines the tactics and techniques used across the full lifecycle of an AI-related incident, such as gaining initial access through a supply chain compromise or phishing, to persisting by poisoning training data, and ultimately disrupting or exfiltrating critical AI assets. In doing so, ATLAS connects abstract risks such as cybersecurity or operational failure to concrete adversary behavior, offering risk management teams a means of proactively determining how AI-specific incidents might unfold and prioritizing safeguards accordingly.

Why AI Governance and Risk Management Must Be Formalized

AI risk cannot be left to ad hoc controls or informal oversight. As adoption accelerates and shadow AI spreads, organizations need a consistent and defensible process to govern how these systems are deployed and managed. Formal programs make oversight repeatable, keep visibility consistent as usage grows, and create a baseline for advancing toward capabilities such as AI risk assessment and quantification.

AI Risk Assessment: The Foundation of Building Readiness

The first step in managing any business risk, be it AI, cyber, or otherwise, is to systematically discern the organization's current level of exposure, taking into account any of the safeguards and policies already in place to minimize it. An AI risk assessment is the vehicle that provides visibility into how AI is being used across the enterprise and evaluates whether existing controls are sufficient to a degree that aligns with risk appetite.

A comprehensive risk assessment will also review governance structures, data management practices, model monitoring procedures, and incident response readiness. A competent assessor will use the opportunity to uncover situations in which GenAI is being used outside of approved channels, creating a major and potentially expensive source of hidden risk.

By mapping strengths and weaknesses, an AI risk assessment gives stakeholders actionable visibility that forms a solid foundation for focused, effective risk reduction plans. In the end, the assessment will be much more than a record of compliance. It will become a defensible account of due diligence and a robust baseline for advancing toward more sophisticated capabilities such as AI risk quantification.

Start evaluating AI exposure today with Kovrr’s AI Risk Assessment.

AI Risk Quantification: From Assessment to Action

An AI risk assessment provides valuable visibility, but it does not measure how control levels affect the likelihood of exploitation or the scale of potential losses. Obtaining these more concrete definitions of exposure requires the next step of quantification. AI risk quantification is the practice of modeling AI-related threats in order to forecast how they are likely to affect the business over a defined period, typically the year ahead.

Step One: Define the Environment

The process begins by establishing the organizational context and ensuring the models ingest defining data such as industry, revenue, regulatory obligations, and how AI models are deployed. These inputs, combined with AI risk assessment outputs, incident records, firmographics, and industry-specific threat intelligence, provide the baseline for quantification.

Step Two: Map AI Model Exposure

This foundation is then used to build a bespoke catalog of potential AI-related events, tailored to the organization’s profile to ensure the scenarios are realistic and relevant. Mapping exposure also requires examining model access, the types of data being handled, reliance on third parties, and the safeguards already in place.

Step Three: Run the Simulations

With the profile established, advanced statistical modeling techniques are then applied, such as the Monte Carlo simulation. The model runs thousands of iterations of the upcoming year, each one representing how AI-related risks could unfold and affect the business under varying conditions.

Step Four: Review the Results

The outputs deepen visibility by distilling these simulations into measurable results, most prominently expressed through a loss exceedance curve (LEC). This curve displays the full range of possible outcomes, from routine incidents to extreme, low-probability events, along with the likelihood of losses exceeding different thresholds. From there, the data can be segmented to provide granular visibility into event drivers and impacts.

Step Five: Prioritize Improvements

The quantified results also surface which of the control safeguards most effectively reduce modeled losses, creating a defensible basis for prioritizing improvements. At this stage, the focus is on distinguishing between the initiatives that deliver measurable business impact and those with marginal effect. Quantification turns these distinctions into a practical direction, giving organizations a clear path for directing resources toward the areas of greatest strategic value.

Schedule a free demo today to learn more about Kovrr’s AI Risk Quantification module.

Building Preparedness for the AI Era

With AI risk quantification, exposure is translated into practical, financial terms that can be incorporated into other decision-making frameworks. Once AI risk ceases to be abstract and gains quantified visibility that can be weighed against investment and governance priorities, AI risk management itself shifts from a narrow control exercise to an integral part of enterprise planning, shaping how the organization prepares for future uncertainty.

Investment Prioritization and ROI

Quantification provides GRC leaders with the ability to objectively evaluate which AI-related risks hold the greatest potential threat to the organization and which safeguards produce the most significant reduction in modeled losses, turning investment planning and resource allocation into a data-driven process. Teams are set up to prioritize initiatives that deliver the highest return in reduced exposure, guided by visibility into which controls drive the greatest modeled impact.

Executive and Board Communication

When AI risk is quantified, it becomes an understandable business risk that resonates at the executive and board level. Rather than discussing control gaps in technical terms or score averages, GRC leaders can present the likelihood of AI risk events occurring, along with the potential cost of defined scenarios. This more familiar language, backed by visibility into likelihood and impact, creates a common ground for discussions, helping to elevate AI risk as a strategic concern and embed it into broader corporate decision-making processes.

Governance and Capital Planning

AI risk quantification helps leadership set risk appetite and tolerance levels that accurately account for the organization's unique exposure. With the modeled distributions of possible loss outcomes, decision-makers can determine whether to strengthen oversight, increase capital reserves, or expand investment in AI risk management programs. In this way, quantification delivers the evidence base for governance choices that balance risk appetite with high-level enterprise aims.

Insurance Optimization

While fully standalone AI insurance policies exist, they are still rare. Most organizations today address AI risk through add-ons to existing policies. In either case, AI risk quantification provides the visibility needed to understand how AI risk exposure interacts with coverage, highlighting potential gaps in terms and conditions and strengthening renewal negotiations. Presenting a quantified view of AI exposure also positions enterprises more favorably with underwriters, supporting better agreements and ensuring policies reflect the real scale of AI-related risk.

The Path Forward for AI Governance and Risk Management

Assessing the unique AI risks an organization faces has become a fundamental component of building resilience and long-term success. The rapid pace of GenAI adoption, paired with mounting regulatory expectations, leaves little room for ad hoc approaches or reactionary strategies. What is required instead is an institutionalized governance path that builds visibility by identifying risks, linking safeguards with recognized frameworks, and advancing toward quantification for optimized planning.

When AI risk assessments uncover maturity gaps and those gaps are quantified into financial and operational terms, risk managers gain visibility and a data-driven foundation for defensible decisions. Investments can be prioritized based on measurable impact, boards and executives can understand exposure in business language, and governance programs can demonstrate accountability to regulators and stakeholders alike.

Those who delay in assessing and quantifying AI risk face compounding exposure and eroding trust at the exact moment when AI capabilities are becoming central to market competitiveness. The organizations that start taking action now will be best positioned not only to comply with new mandates but to withstand unexpected incidents and capitalize on AI with confidence.

Begin the process of strengthening AI resilience by exploring Kovrr’s AI Risk module. Schedule a free demo today.

.webp)

.jpg)

.jpg)