Instantly Assess Third-Party AI Risk Scores for Free

AI risk often enters the organization through third-party vendors, yet insight into those systems still comes from point-in-time questionnaires that quickly go stale. This free tool lets you instantly assess the risk of third-party GenAI systems using continuously updated risk intelligence.

How the Third-Party AI Risk Assessment Works

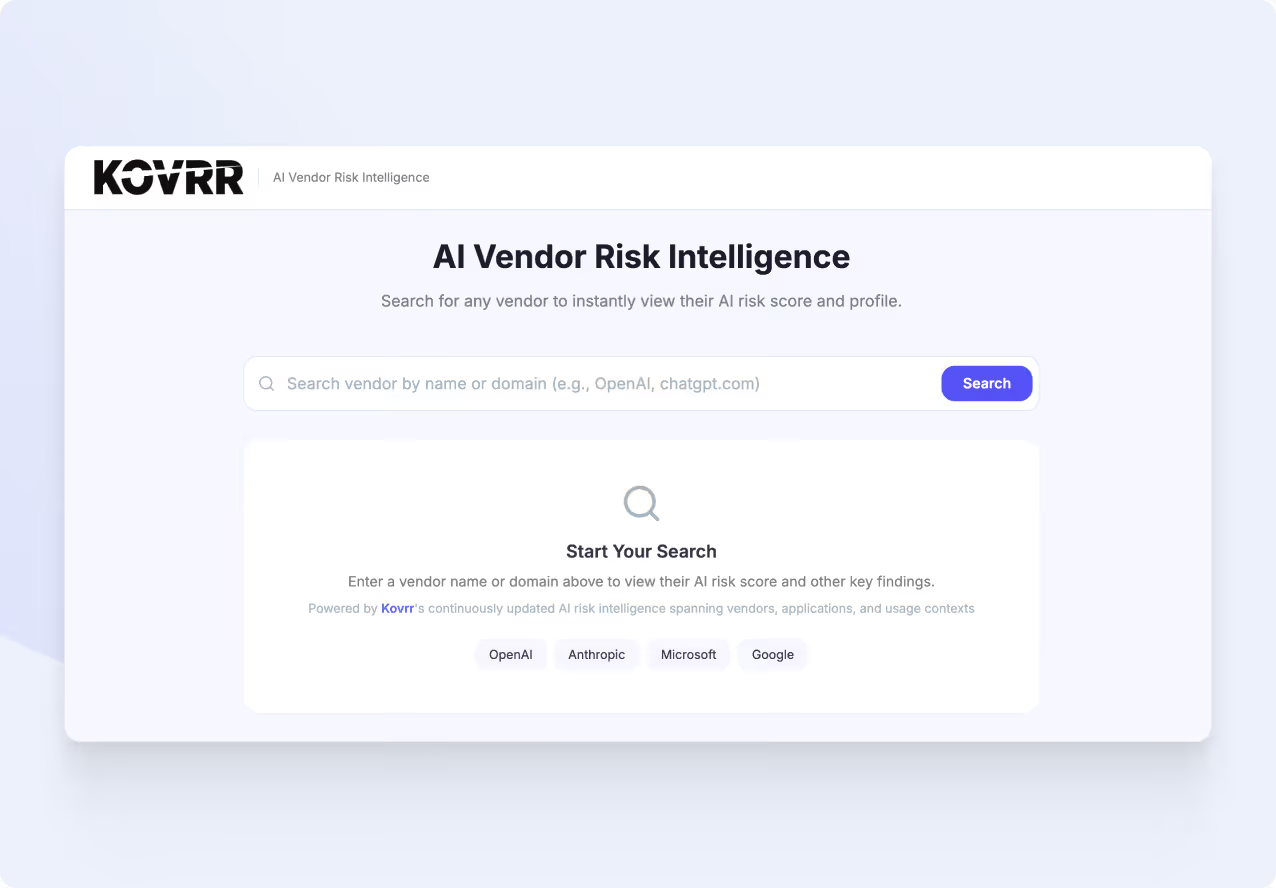

Enter the name of a GenAI system or vendor, such as ChatGPT, and instantly receive a vendor AI risk report. The assessment surfaces current risk signals based on Kovrr’s internal risk models and intelligence sources, providing a timestamped snapshot that is far more informative than a static questionnaire. The report is designed to support discussion and decision-making, not to serve as a certification or compliance ruling.

What the Third-Party AI Risk Assessment Includes

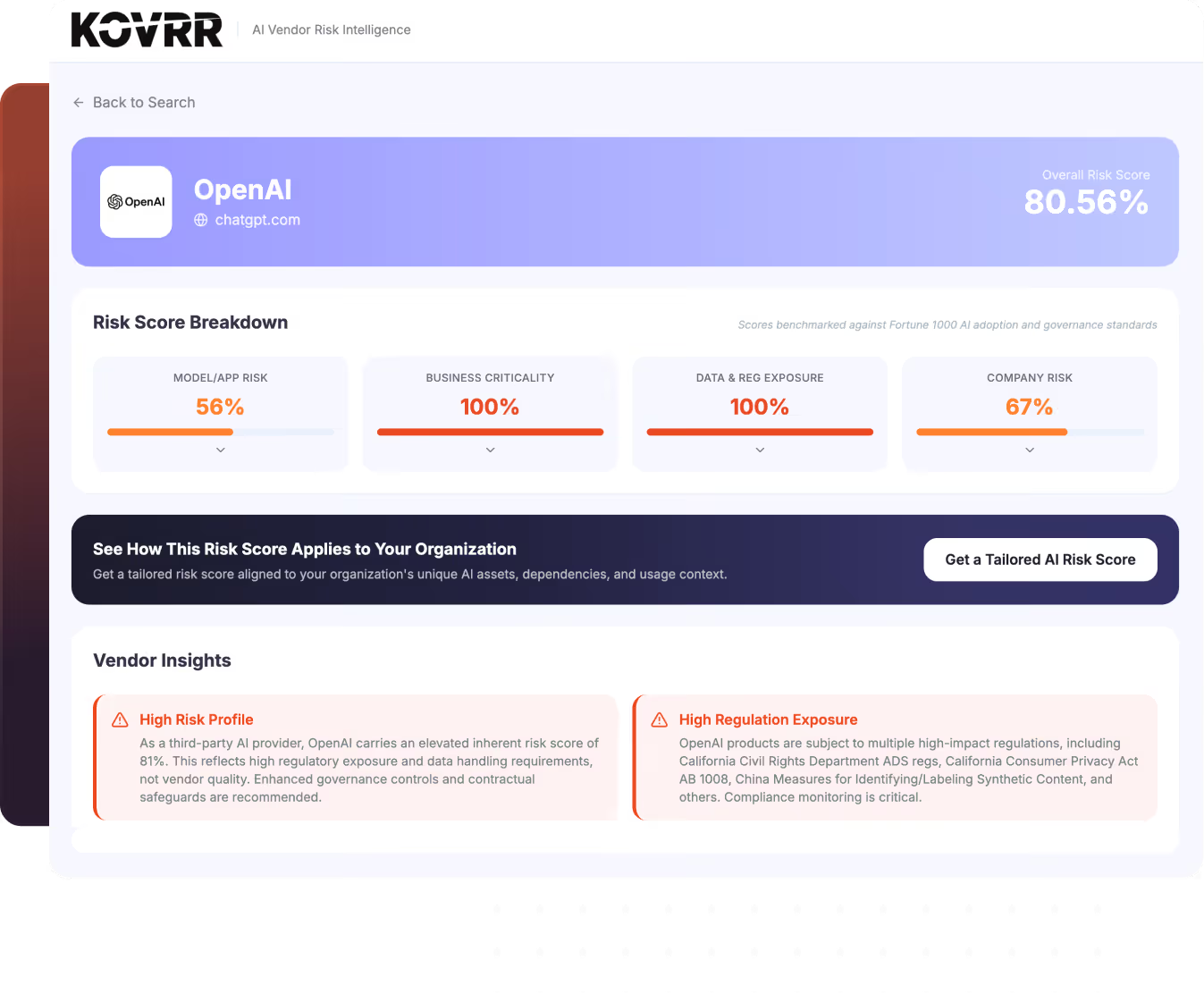

Each third-party AI risk assessment report opens with a concise, actionable summary of risk signals tied to the specific vendor you searched.

The full report includes:

Third-party identity and report timestamp

An overall AI risk score

A clear risk level indicator

A breakdown across core AI risk dimensions

Audit-ready documentation for compliance processes

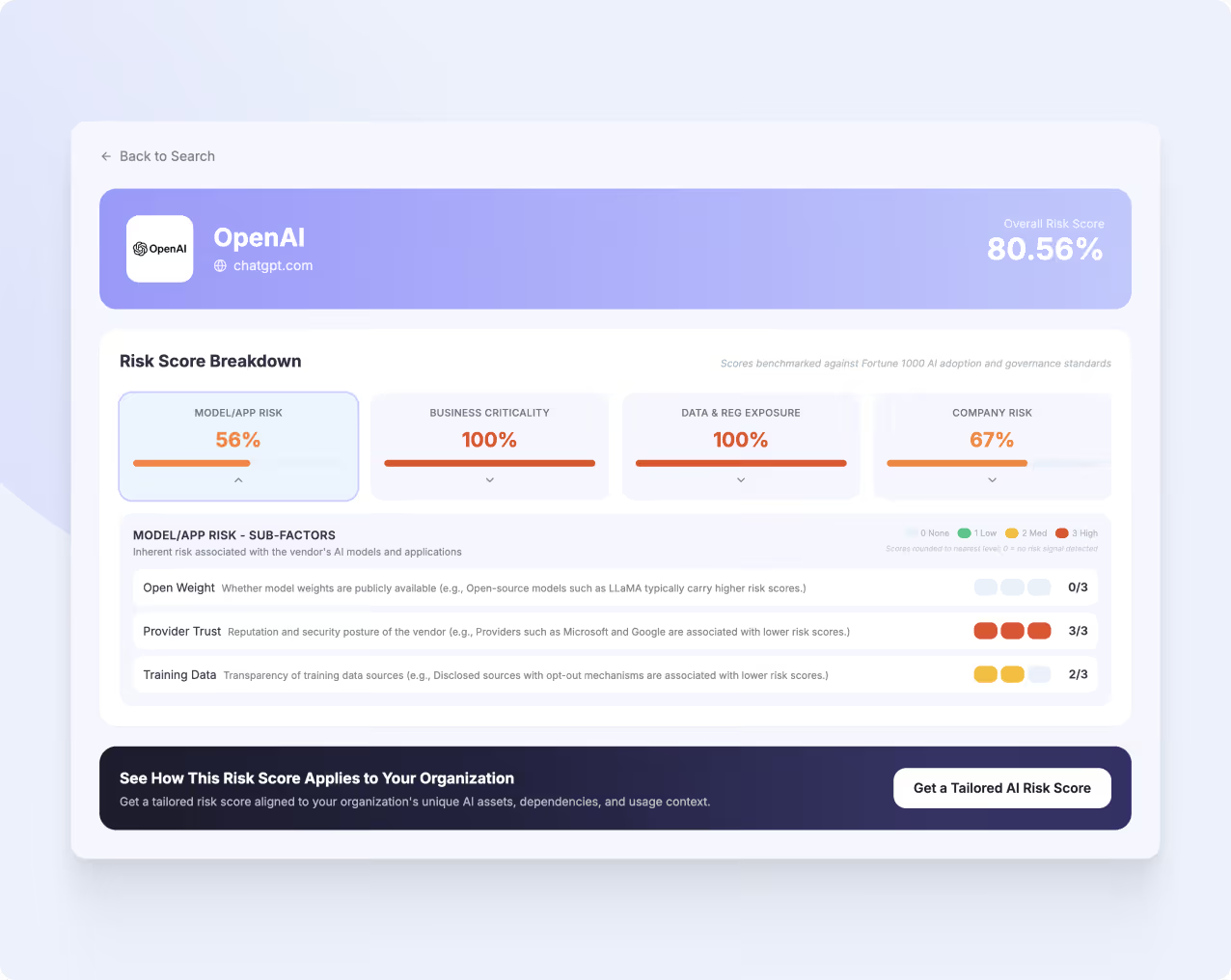

Understanding What Drives the AI Risk Score

The assessment does not reduce risk to a single number. It shows how different factors contribute to the overall score, so you can see how risk accumulates across areas such as:

Model and application characteristics

Business reliance on the system

Regulatory exposure based on jurisdiction and use

Company-level risk signals

Implementation and usage considerations

This breakdown helps explain why a third-party GenAI system carries risk, not just that it does, making the score easier to interpret and discuss.
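To make the idea of a score breakdown concrete, here is a minimal sketch of how per-dimension scores could roll up into one overall number while keeping each contribution visible. It is an illustration only: the dimension keys, the weights, the 0 to 100 scale, and the function names are all assumptions made for this example and do not describe Kovrr's actual scoring model.

```python
# Hypothetical weights per risk dimension (they sum to 1.0).
# These names and values are illustrative assumptions, not Kovrr's model.
WEIGHTS = {
    "model_and_application": 0.25,
    "business_reliance": 0.20,
    "regulatory_exposure": 0.20,
    "company_signals": 0.20,
    "implementation_and_usage": 0.15,
}


def score_breakdown(dimension_scores: dict[str, float]) -> dict[str, float]:
    """Return each dimension's weighted contribution to the overall score.

    Scores are assumed to be on a 0-100 scale.
    """
    missing = WEIGHTS.keys() - dimension_scores.keys()
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    return {name: WEIGHTS[name] * dimension_scores[name] for name in WEIGHTS}


def overall_score(dimension_scores: dict[str, float]) -> float:
    """Sum the weighted contributions into a single overall score."""
    return sum(score_breakdown(dimension_scores).values())


def top_driver(dimension_scores: dict[str, float]) -> str:
    """Name the dimension that contributes most to the overall score."""
    contributions = score_breakdown(dimension_scores)
    return max(contributions, key=contributions.get)


# Made-up example scores for a fictional vendor.
example = {
    "model_and_application": 80,
    "business_reliance": 40,
    "regulatory_exposure": 90,
    "company_signals": 30,
    "implementation_and_usage": 50,
}
print(score_breakdown(example))
print(overall_score(example), top_driver(example))
```

Under these assumed weights, the largest contribution comes from the model and application dimension even though regulatory exposure has the higher raw score, which is the kind of distinction a breakdown surfaces and a single number hides.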

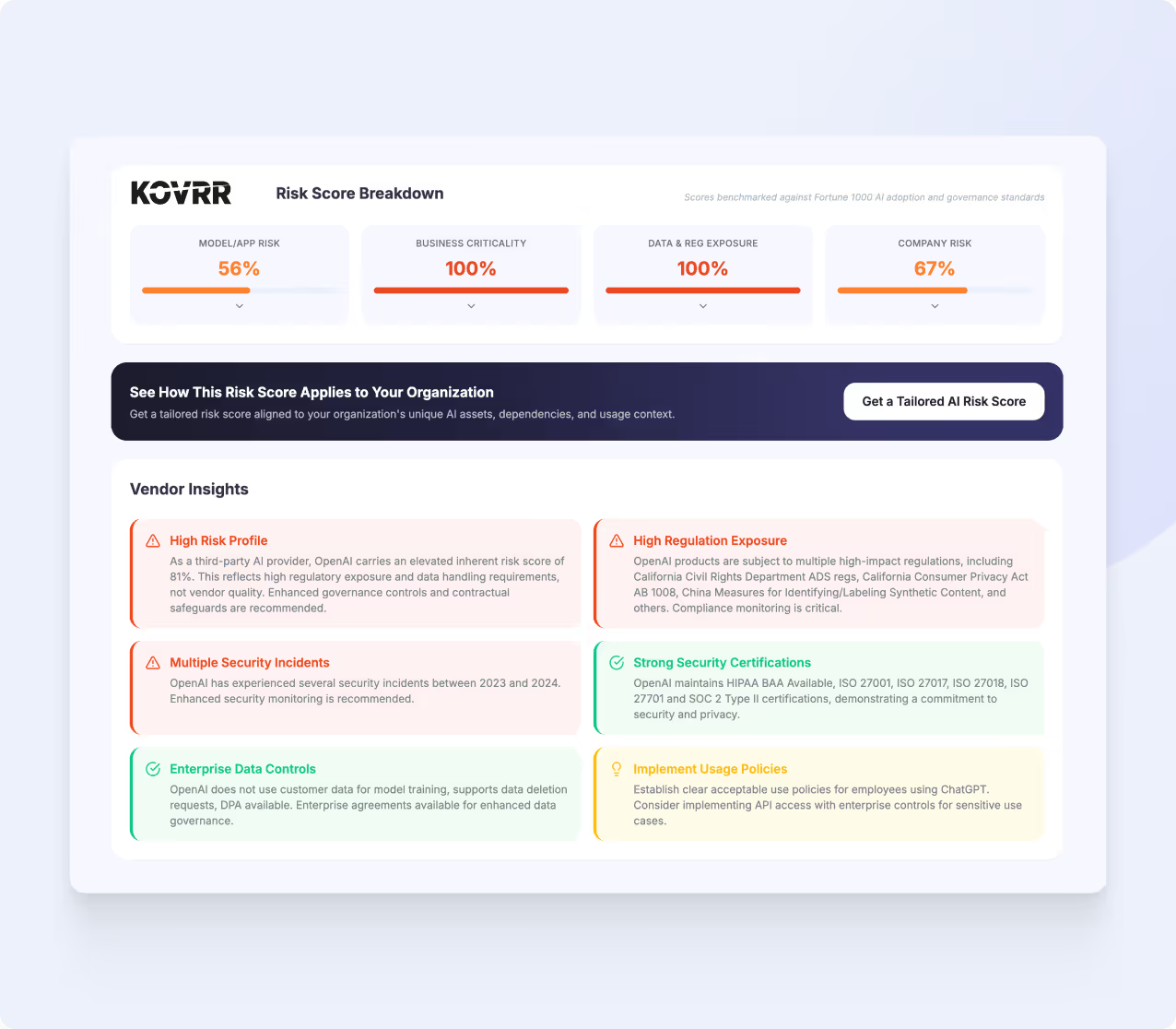

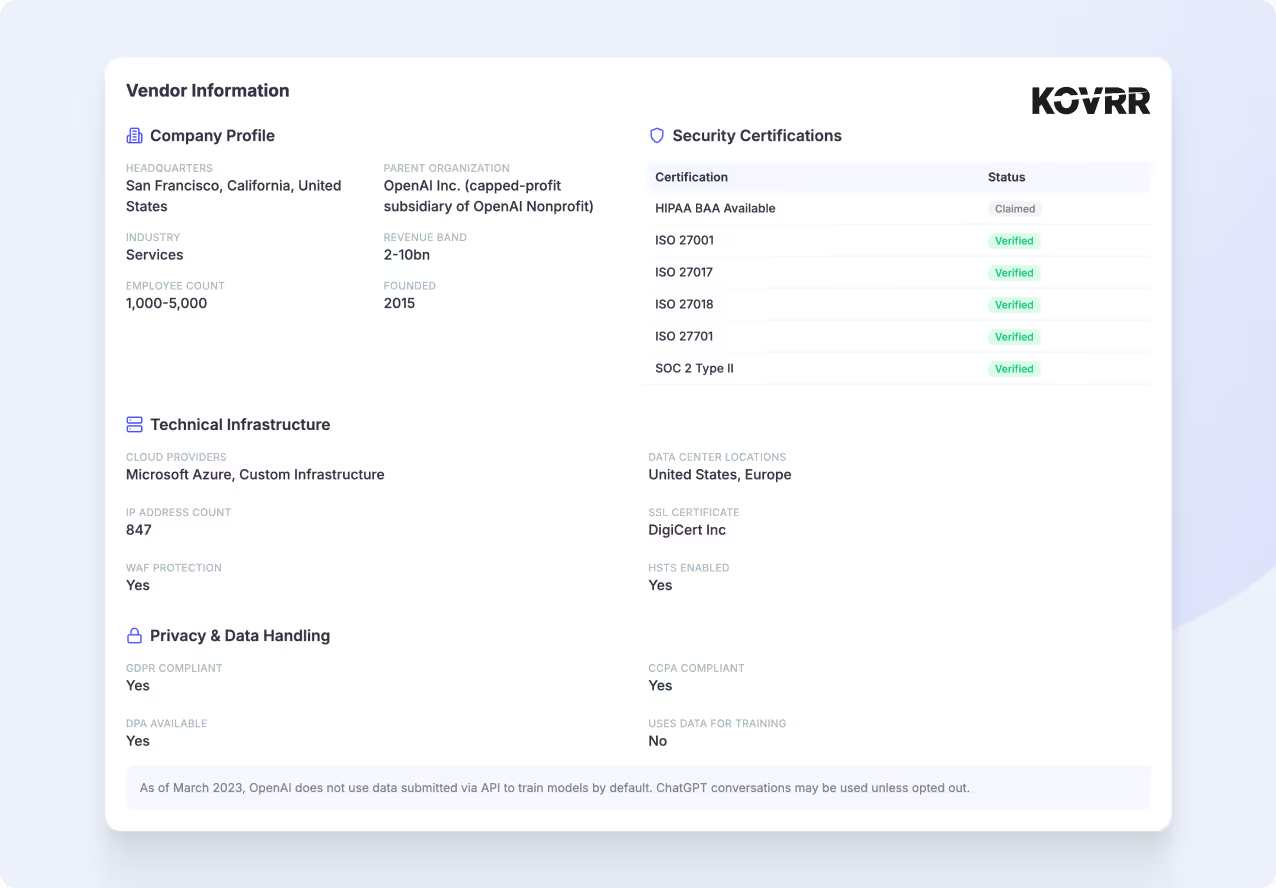

Signals That Shape the Third-Party AI Risk Picture

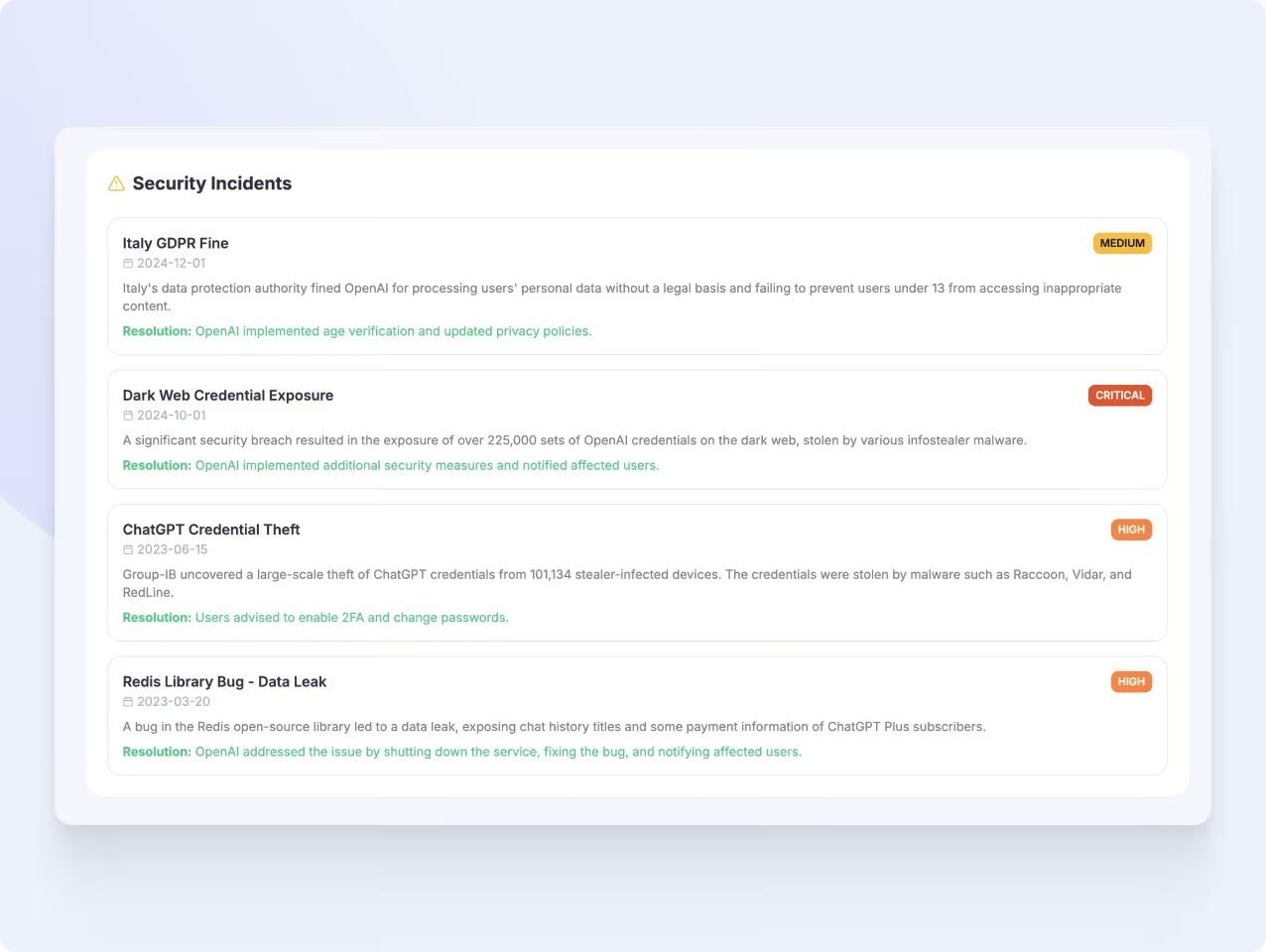

Beyond scoring, the assessment highlights notable findings that shape the third-party's AI risk profile. These details may include:

Security certifications and governance signals

Infrastructure and deployment patterns

Known incidents or areas requiring attention

Regulatory considerations tied to AI use

This context turns raw data into something that AI risk management and AI governance teams can discuss and act on.

Why Instant Third-Party AI Risk Visibility Matters

Third-party AI systems introduce exposure in ways traditional vendor assessments were not built to capture. Model behavior, training practices, deployment models, and regulatory obligations all affect risk, yet these factors change faster than point-in-time reviews can reflect. Without current, asset-level visibility, organizations struggle to justify approvals or explain decisions once scrutiny increases. This report shows what becomes visible when third-party AI applications are evaluated as assets rather than abstractions.

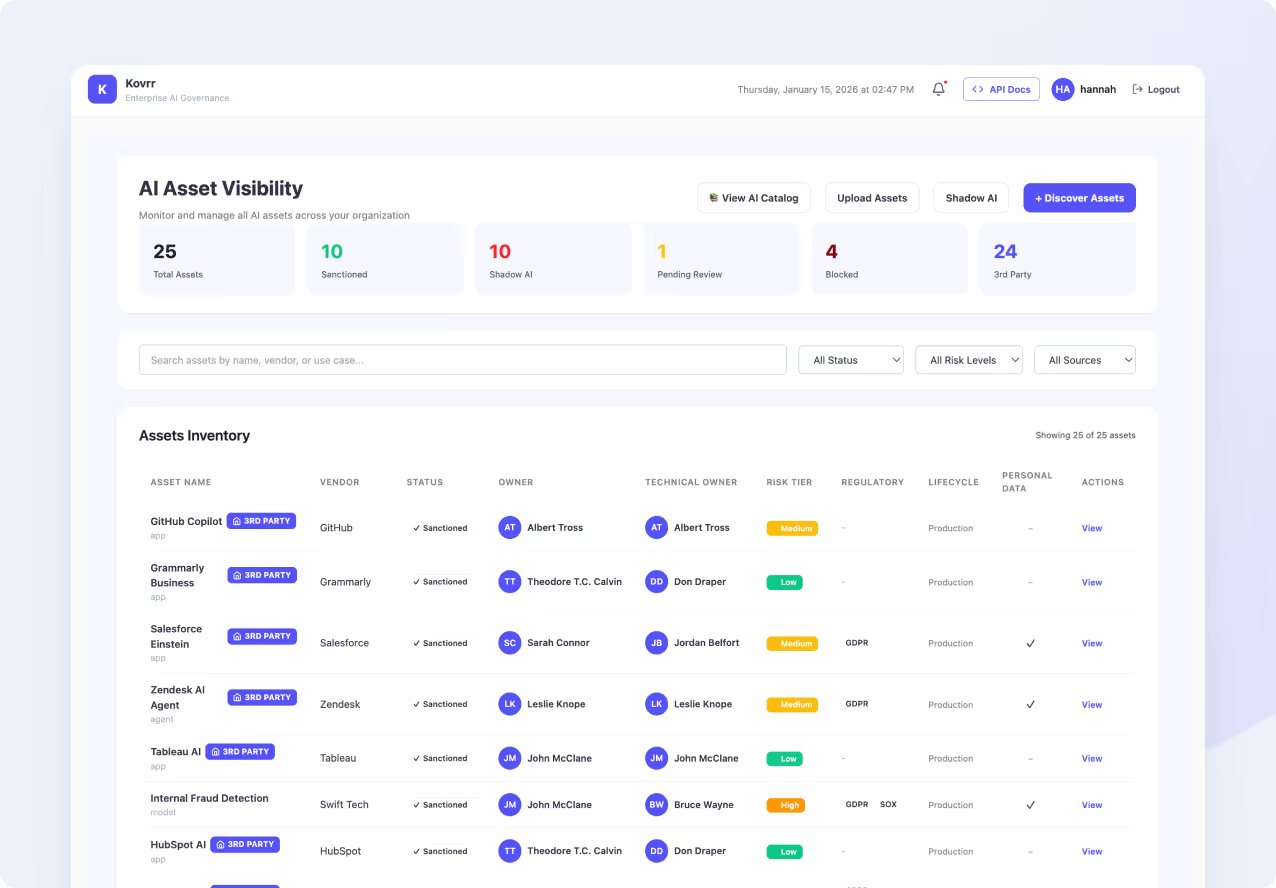

From Generalized Scores to Full Third-Party AI Asset Visibility

This free assessment is intentionally scoped to provide fast, targeted insight without setup or integrations. Kovrr’s AI Asset Visibility module extends this approach across your organization by mapping all AI systems in use, including third-party tools, embedded AI, and internal deployments. What begins here as a single assessment can scale into continuous visibility across your entire AI footprint.

Transform Third-Party AI Risk Into Actionable Metrics

Start with the free third-party AI risk score assessment to understand the risk a third-party system introduces and why asset-level visibility matters before decisions are made.