
Ensuring Institutional AI Ownership With the AI Compliance Officer

February 4, 2026

TL;DR

- AI systems are already embedded across enterprise operations, creating real compliance obligations that can no longer be managed informally.

- Existing compliance models struggle with AI because regulatory expectations now demand explainability, accountability, and continuous oversight.

- Forward-looking organizations are addressing this gap by formalizing ownership through an AI Compliance Officer responsible for operationalizing AI compliance.

- AI asset visibility is essential, as shadow AI and undocumented usage undermine accountability and limit the effectiveness of compliance programs and assessments.

- Structured, repeatable AI compliance assessments help organizations translate policy into practice and maintain defensible governance as AI usage becomes visible and evolves.

- Treating AI compliance as a core enterprise function enables organizations to scale governance and avoid reactive retrofitting under regulatory pressure.

The Organizational Need to Formalize AI Compliance

Artificial intelligence (AI) systems and generative AI (GenAI) tools are already embedded across enterprise operations in ways that trigger compliance obligations, both under AI-specific regulations and under other reporting mandates. In many cases, this adoption is occurring informally, through employee-driven tools or AI features embedded within third-party platforms, without centralized visibility or approval. Nevertheless, these systems and tools still influence core business practices such as hiring decisions, customer interactions, internal productivity workflows, and third-party services.

Regardless of how organizations label these solutions internally, whether as high-risk or low-risk, legal and fiduciary expectations still apply. Regulators have made this position increasingly explicit. The EU AI Act, for one, takes a risk-based approach, establishing formal requirements around risk management, documentation, governance, and accountability, with the most extensive obligations falling on high-risk systems. Canada's proposed Artificial Intelligence and Data Act (AIDA) takes a similar approach. Although it has yet to be adopted, the proposed legislation would introduce robust requirements for oversight and controls around what it defines as high-impact AI systems.

Despite this trend, many organizations are still managing AI compliance indirectly. Privacy teams examine data usage. Legal teams interpret regulatory exposure, and security teams review safeguards. Each of these distinct business functions addresses part of the task, but no one owns the whole, resulting in fragmentation. When these compliance responsibilities remain distributed, accountability weakens. Avoiding this common issue requires coordination rather than a simple policy or mandate. As AI adoption continues and regulatory expectations mature and expand, such a disjointed approach will become increasingly difficult to defend.

Why Existing Compliance Models Are Struggling With AI

Traditional compliance programs were designed to oversee risks that evolved at a predictable, steady pace. Policies could be written, controls could be implemented, and assessments could be conducted on defined and standardized cycles. AI does not follow that model. AI usage spreads rapidly across business units, often through vendors or employee-driven adoption that occurs outside formal approval and tracking mechanisms. Models change frequently as updates are introduced, and new use cases emerge far more quickly than governance processes can adapt.

At the same time, regulators are no longer focused solely on data protection and privacy. Expectations have expanded considerably and now include explainability, fairness, lifecycle oversight, and, critically, human accountability. AI regulations and frameworks such as the EU AI Act, Colorado's Artificial Intelligence Act (CAIA), the UK's National AI Strategy, and other emerging national standards are explicit about these expanded requirements. Regulators expect organizations of all sizes to demonstrate how AI systems are governed and accounted for, not merely that policies exist.

Unfortunately, when AI compliance is treated as an extension of existing compliance programs without dedicated ownership, gaps inevitably appear. Likewise, when reviews are conducted on a one-off basis, they will fail to capture the continuous change that is a fundamental part of AI’s reality. This is especially true when shadow AI tools and other AI systems are adopted informally, creating blind spots that traditional compliance reviews are not designed to detect.

When regulators or auditors question how AI compliance is being managed under these circumstances, answers tend to be incomplete. Ultimately, existing compliance models were not designed for this level of dynamism, and stretching them further only increases risk.

The Case for a Dedicated AI Compliance Officer

The more forward-looking enterprises are responding to this regulatory shift by implementing AI-specific programs and formalizing ownership. The AI Compliance Officer, for example, has begun to emerge as a dedicated role created to bring consistency and authority to AI compliance efforts that have outgrown informal coordination. Rather than being a novelty or an optimization, the role is a practical response to how AI adoption and regulation are actually unfolding.

As AI-specific laws and guidance continue to expand across jurisdictions, teams are being asked to manage a growing body of obligations that cut across business units and technologies. The resulting workload cannot feasibly be absorbed indefinitely as a secondary responsibility. Consequently, the AI Compliance Officer exists purely to operationalize AI compliance. By centralizing this responsibility, organizations can ensure that regulatory expectations are met and applied in real processes as opposed to disconnected policies.

What the AI Compliance Officer Is Responsible For

The AI Compliance Officer is in charge of establishing and sustaining a coherent, actionable, organization-wide approach to AI regulatory compliance. This responsibility begins with defining standardized processes for how AI systems and GenAI tools are reviewed, approved, documented, and monitored across their lifecycle. Instead of passively allowing each department or unit to interpret compliance expectations independently, the role creates a consistent operating model that is applicable across the entire enterprise.

Coordination, thus, is central to this mandate. AI compliance spans multiple business fronts, including legal interpretation, data governance, security controls, procurement, and operational use. It's important to note that the AI Compliance Officer does not replace the professionals responsible for these processes, but rather ensures their efforts are aligned and sequenced in a way that meets evolving regulatory expectations. The function needs to clarify who is responsible for specific controls and when issues must be escalated.

The role also owns the compliance record. Regulators now expect organizations to demonstrate how AI governance decisions are made and why. The AI Compliance Officer, therefore, must maintain documentation over time, making sure that processes are defensible and that records show how AI systems are actually used rather than how they were initially designed or implemented. By serving as a clear point of accountability, the role reduces ambiguity during reviews and inquiries. Questions about AI usage no longer circulate without resolution. They are directed to a single owner who has the evidence and authority to answer.

Giving the AI Compliance Function Authority

One of the most persistent challenges any compliance team faces is a lack of organizational weight. Without a dedicated officer, AI compliance competes for attention with other priorities, delaying reviews and other essential processes. Enforcement becomes inconsistent, and escalation paths remain unclear. Assigning a dedicated AI Compliance Officer, however, signals that the function is a permanent enterprise responsibility, a signal that carries particular weight under regulatory regimes such as the EU AI Act.

The authority and official title enable this AI professional to require participation from other staff members and to escalate issues when compliance standards are not being met. Institutional ownership and sanctioning also change how AI compliance is perceived across the organization. Business leaders recognize that AI usage is subject to review, and compliance requirements are treated as obligations rather than guidance. Over time, this shifts AI compliance from an optional exercise into a standard component of governance.

From Policy Statement to Embedded AI Compliance

Many organizations have already taken an important first step by circulating acceptable use policies or internal guidance on responsible AI. These documents help establish intent and signal awareness, but on their own, they do not satisfy compliance expectations, nor do they account for unsanctioned AI use or AI functionality embedded within tools already approved for other purposes.

Regulators are clear by now that written policies are only meaningful when they're actively implemented. Consequently, AI compliance must be woven into everyday operational processes. Procurement teams must understand when AI use triggers additional review, and other business units need clear checkpoints before deploying AI-enabled tools. Without visibility into shadow AI usage, these checkpoints are often bypassed entirely.

Vendor onboarding and management, likewise, must account for AI usage embedded within third-party services. Reviews should neither be occasional nor discretionary. Instead, they need to follow a consistent, repeatable process that holds up under scrutiny. The AI Compliance Officer plays a central role in making this transition possible. By moving AI compliance out of static policy documents and into operational workflows, the role ensures governance is proactively practiced and aligned with the regulatory reality.

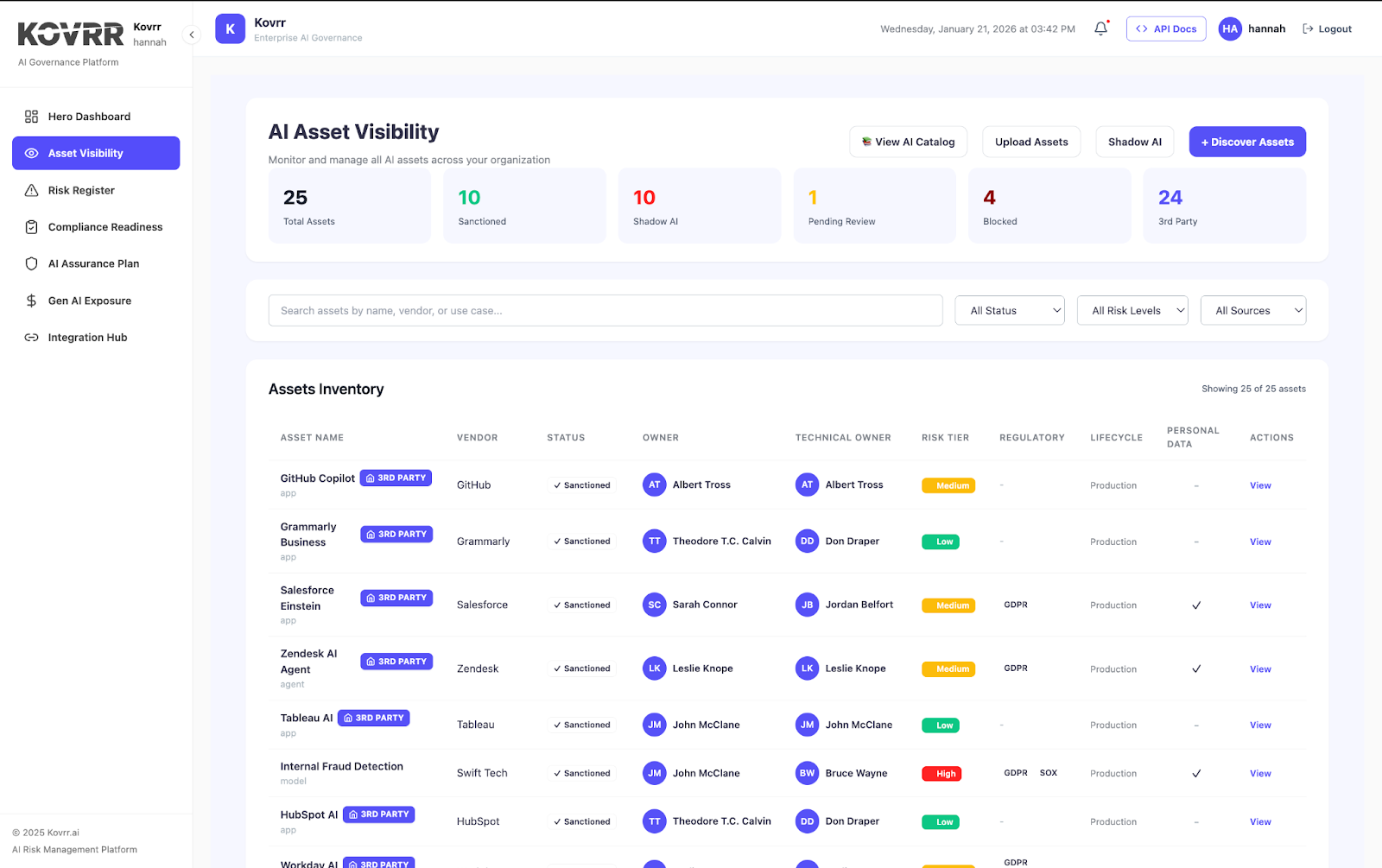

Beginning AI Compliance With AI Asset Visibility

AI compliance cannot function without visibility into where AI is being used. Before policies can be enforced or assessments can be meaningfully conducted, organizations must first understand which AI systems and embedded capabilities exist across the enterprise. Shadow AI has become a persistent challenge in this context. Employees adopt AI tools to improve productivity, vendors introduce AI-enabled features into existing platforms, and business units experiment with new capabilities outside formal approval processes.

In most cases, this activity is not malicious. Rather, it simply moves faster than the governance structures designed to control it. The result, however, is AI usage that falls outside documented inventories and remains invisible to the teams responsible for oversight. For the AI Compliance Officer, this lack of visibility undermines accountability. Compliance obligations apply regardless of whether AI use is formally sanctioned or centrally tracked. When AI assets are unknown or poorly documented, organizations cannot reliably demonstrate how compliance expectations are being met.

Adopting the proper tools that can uncover this shadow usage and establishing AI asset visibility creates the foundation for institutional ownership. It allows compliance teams to identify where AI is deployed, understand how it is employed, and determine which systems require review under applicable regulations and frameworks. Without this step and without these solutions, downstream activities such as assessments, documentation, and monitoring remain incomplete.
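As a rough illustration of the inventory step described above, the following Python sketch records AI assets and flags those that would need compliance review. Everything in it is hypothetical: the `AIAsset` fields, the `source` labels, the `REVIEW_USE_CASES` set, and the review rule are assumptions made for the example, not requirements drawn from any regulation or from a specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIAsset:
    """One entry in a hypothetical AI asset inventory."""
    name: str
    owner: str        # business unit accountable for the tool
    source: str       # assumed labels: "sanctioned", "vendor_embedded", "shadow"
    use_case: str     # e.g. "hiring", "internal_productivity"
    documented: bool  # True if the usage is recorded and evidenced


# Assumption for illustration: use cases that always warrant review.
REVIEW_USE_CASES = {"hiring", "customer_interaction"}


def needs_review(asset: AIAsset) -> bool:
    """Flag assets that are unsanctioned, undocumented, or sensitive."""
    return (
        asset.source != "sanctioned"
        or not asset.documented
        or asset.use_case in REVIEW_USE_CASES
    )


def review_queue(inventory: list[AIAsset]) -> list[str]:
    """Return the names of assets needing review, sorted alphabetically."""
    return sorted(a.name for a in inventory if needs_review(a))


# Invented sample data covering sanctioned, embedded, and shadow usage.
inventory = [
    AIAsset("resume-screener", "HR", "sanctioned", "hiring", True),
    AIAsset("meeting-summarizer", "Sales", "shadow", "internal_productivity", False),
    AIAsset("crm-assistant", "Support", "vendor_embedded", "customer_interaction", True),
    AIAsset("code-helper", "Engineering", "sanctioned", "internal_productivity", True),
]

print(review_queue(inventory))
```

In this sample, only the sanctioned, documented, low-sensitivity tool stays out of the queue. A real inventory would need discovery tooling to populate it, since shadow usage by definition does not register itself.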

Structured Assessments as a Core AI Compliance Mechanism

Assessments have always been a central element of effective compliance programs, offering a disciplined means for teams to understand their organization's current practices, identify gaps, and demonstrate that obligations are being evaluated systematically rather than informally. In the context of AI, structured assessments become all the more critical due to the pace at which AI systems are adopted and the complexity of the enterprise-level obligations that are attached to them.

An AI compliance readiness assessment allows an organization to evaluate how AI is governed in practice. These evaluations reveal where responsibilities are formally assigned and how oversight is exercised. Some AI compliance assessments, like the one offered by Kovrr, also allow stakeholders to attach relevant documentation and ensure all related evidence is kept current. This ability matters as organizations navigate overlapping requirements from both local and national governments.

Repeatability is equally important, as AI usage is far from static. Compliance programs need a reliable way to reassess governance as systems evolve and use cases expand. Without this repeatability, AI compliance becomes reactive, driven by incidents or inquiries rather than deliberate oversight. Repeatable, structured, SaaS-based assessments thus turn AI compliance from an ad hoc exercise into an ongoing practice that can be sustained as regulatory expectations continue to mature.
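One way to picture repeatability is as a comparison between two dated assessment runs. The Python sketch below is a minimal illustration under stated assumptions: the `AssessmentRun` structure, the control IDs, the dates, and the evidence file name are all invented for the example and do not describe how any particular assessment product works.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class AssessmentRun:
    """One dated pass through a hypothetical control checklist."""
    run_date: date
    results: dict[str, bool]  # control ID -> whether the control is met
    evidence: dict[str, list[str]] = field(default_factory=dict)  # control ID -> attached documents


def open_gaps(run: AssessmentRun) -> set[str]:
    """Controls that were assessed and found not met."""
    return {control for control, met in run.results.items() if not met}


def compare_runs(previous: AssessmentRun, current: AssessmentRun) -> dict[str, list[str]]:
    """Summarize how the gaps changed between two runs.

    Caveat: a control dropped from the current checklist also shows up
    as "closed", so checklists should stay stable between runs.
    """
    before, after = open_gaps(previous), open_gaps(current)
    return {
        "closed": sorted(before - after),
        "still_open": sorted(before & after),
        "new": sorted(after - before),
    }


# Invented sample runs, one quarter apart.
q1 = AssessmentRun(
    date(2026, 1, 15),
    {"inventory": False, "ownership": True, "vendor_review": False},
)
q2 = AssessmentRun(
    date(2026, 4, 15),
    {"inventory": True, "ownership": True, "vendor_review": False, "monitoring": False},
    evidence={"inventory": ["ai-asset-register.xlsx"]},
)

print(compare_runs(q1, q2))
```

Keeping each run as a dated record, with evidence attached per control, is what lets the comparison show progress over time rather than a single snapshot.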

The Growing Divide Between Formalized and Ad Hoc AI Compliance

A stark divide is emerging between enterprises that have institutionalized AI compliance and adopted the relevant tools and those that continue to rely on informal, spreadsheet-based arrangements. The former, which typically also have dedicated AI compliance ownership, are far better positioned to respond to regulatory scrutiny and maintain consistency across business units. Oversight becomes repeatable rather than situational, and compliance programs are continuously reviewed and updated as new regulations emerge.

Ad hoc approaches, while seemingly workable at first, typically falter under pressure. Regulatory inquiries or external reviews quickly expose the gaps in ownership and documentation that this approach tends to leave behind. Once that occurs, organizations are compelled to retrofit governance under tight deadlines, often while responding to questions they're not fully prepared to answer. The enterprises equipped to avoid this, conversely, are those that have given AI compliance structure, authority, and operational support at scale.

Treating AI Compliance as a Core Enterprise Function

AI compliance can no longer be an optional or developing consideration that organizations manage informally or revisit intermittently. Instead, it must be a durable feature of enterprise governance, shaped by sustained AI adoption and a rapidly expanding body of regulatory expectations across jurisdictions. Obligations will only continue to mature and expand, making the question not a matter of whether AI compliance exists but whether it is structured to function effectively at scale.

The AI Compliance Officer provides a practical answer to that challenge. By establishing clear ownership, embedding compliance practices into operational workflows, and ensuring visibility into how and where AI is actually used, the role gives organizations a defensible foundation for governance. Without visibility, even well-designed compliance programs struggle to account for shadow AI and undocumented usage that sits outside formal processes. With it, assessments, documentation, and oversight can be applied consistently and credibly.

This institutional approach replaces fragmentation with coordination and transforms compliance from a reactive obligation into a managed, ongoing function. Organizations that formalize AI compliance early, supported by asset visibility and repeatable assessment practices, are better positioned to adapt as regulations evolve and AI usage expands. They establish standards that scale and avoid the disruption that often accompanies retrofitting governance under pressure. In the current market, treating AI compliance as a core enterprise function is not a signal of caution, but a clear reflection of readiness and resilience.

Explore how Kovrr helps compliance and GRC teams operationalize AI governance at scale through AI compliance readiness assessments and other SaaS-based tools. Book a demo today.
