
Advance AI and Cyber Oversight With Kovrr’s Control Assessment

November 17, 2025

TL;DR

- Kovrr’s Control Assessment benchmarks AI and cybersecurity safeguards against frameworks including NIST AI RMF, ISO 42001, NIST CSF, ISO 27001, and CIS, exposing priority gaps and giving organizations visibility into both cyber and AI exposure.

- Configurable scoring, granularity, and answer formats ensure assessments reflect organizational goals, producing results that support practical decisions and highlight progress toward maturity objectives.

- Current and target maturity scores reveal measurable improvement pathways, with ownership assignments and notes fostering accountability and continuous advancement across risk domains.

- Interactive dashboards visualize results through spider charts, heatmaps, and gap summaries, turning technical data into insights executives can act on with confidence.

- Quantification extends assessments into financial terms, linking gaps to forecasted losses across AI and cyber scenarios, enabling defensible governance and cost-effective risk reduction strategies.

Breaking Down Barriers to Strategic Risk Measurement

Conducting a risk assessment has become a baseline requirement, not merely an internal best practice, for building effective GRC programs. Whether the focus is cybersecurity or the newer frontier of AI, assessments offer a systematic way to illuminate an organization’s current exposure and show how safeguards are working across both domains. For many teams, however, getting the assessment started remains a challenge.

In cybersecurity, for instance, the evaluation process is well established but can still be arduous, often requiring extensive data collection and custom scoring methods that take time to develop. Alternatives, such as enlisting external consultants to conduct the assessment, can be costly and difficult to scale. Even when intent and stakeholder commitment are solid, the practical demands and logistics can stall momentum, leaving evaluation efforts underused or incomplete.

AI assessments, by contrast, are only just emerging. Frameworks like the NIST AI RMF and ISO/IEC 42001 are still new, and organizations are taking them on with little precedent to draw from. While the primary obstacles are not yet as well-documented as in cybersecurity, it’s already clear that without a methodical approach, these evaluations risk becoming equally difficult to manage.

Kovrr’s Control Assessment was designed to remove those barriers, giving security, risk, and GRC teams a standards-aligned means to evaluate both cybersecurity and AI posture. The platform supports multiple frameworks, including NIST CSF, ISO 27001, and CIS for cyber, and NIST AI RMF and ISO 42001 for AI, allowing teams to choose the structure that best fits their organizational priorities.

It was built with speed and practicality in mind, allowing teams to focus less on managing spreadsheets and more on understanding results, identifying maturity gaps, and creating data-driven resilience plans, with visibility into cyber and AI safeguards as the foundation.

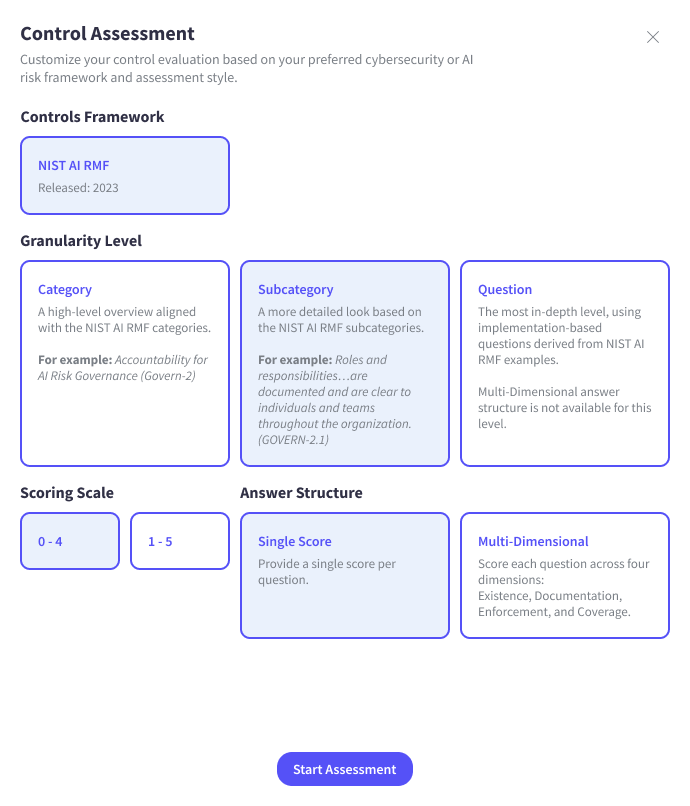

Setting the Parameters for a Tailored Maturity Evaluation

Kovrr's Control Assessment process begins with a configurable setup that lets GRC leaders and SRMs shape the evaluation to fit their organizational needs. From scoring methods to the level of detail included, the tool's flexibility ensures results reflect both current cybersecurity and AI program maturity, while giving leaders visibility into how safeguards are governed and enforced.

As shown in the NIST AI Risk Management Framework (NIST AI RMF) example, users can select their preferred evaluation granularity. A Category-level review maps to framework domains and categories, such as governance or detection. Going one level deeper, Subcategory-level reviews correspond to specific outcome statements within the framework (e.g., “Organizational context is established and understood”).

For those seeking a deeper operational view, the Question-level option breaks each outcome down into implementation-based prompts, asking whether policies exist, are documented, and are enforced across the organization.

Scoring preferences can also be tailored: teams can choose between a 0–4 and a 1–5 scale, depending on what aligns with internal practices. They can also score each item in a standard way (i.e., one answer per item) or use a more granular model that captures enforcement, documentation, and coverage separately. These choices help set a useful benchmark that can adapt as maturity grows or business needs change.
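To make the two answer formats concrete, here is a minimal Python sketch that collapses the granular model's three answers (enforcement, documentation, coverage) into a single item score on either scale. The post does not say how Kovrr combines these answers, so the equal-weight average, the function name `item_score`, and the `SCALES` table are all hypothetical.

```python
from statistics import mean

# Hypothetical scale table; the 0-4 and 1-5 ranges come from the post.
SCALES = {"0-4": (0, 4), "1-5": (1, 5)}


def item_score(enforcement: float, documentation: float, coverage: float,
               scale: str = "0-4") -> float:
    """Collapse granular answers into one item score.

    Assumption: the three dimensions are weighted equally (a plain mean).
    """
    low, high = SCALES[scale]
    answers = (enforcement, documentation, coverage)
    for answer in answers:
        if not low <= answer <= high:
            raise ValueError(f"answer {answer} is outside the {scale} scale")
    return mean(answers)


# Standard model: a single answer per item, used as-is.
standard_score = 3
# Granular model: partially enforced, fully documented, moderate coverage.
granular_score = item_score(enforcement=2, documentation=4, coverage=3)
```

Equal weighting is only one option; an organization that cares most about enforcement could weight that dimension more heavily.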

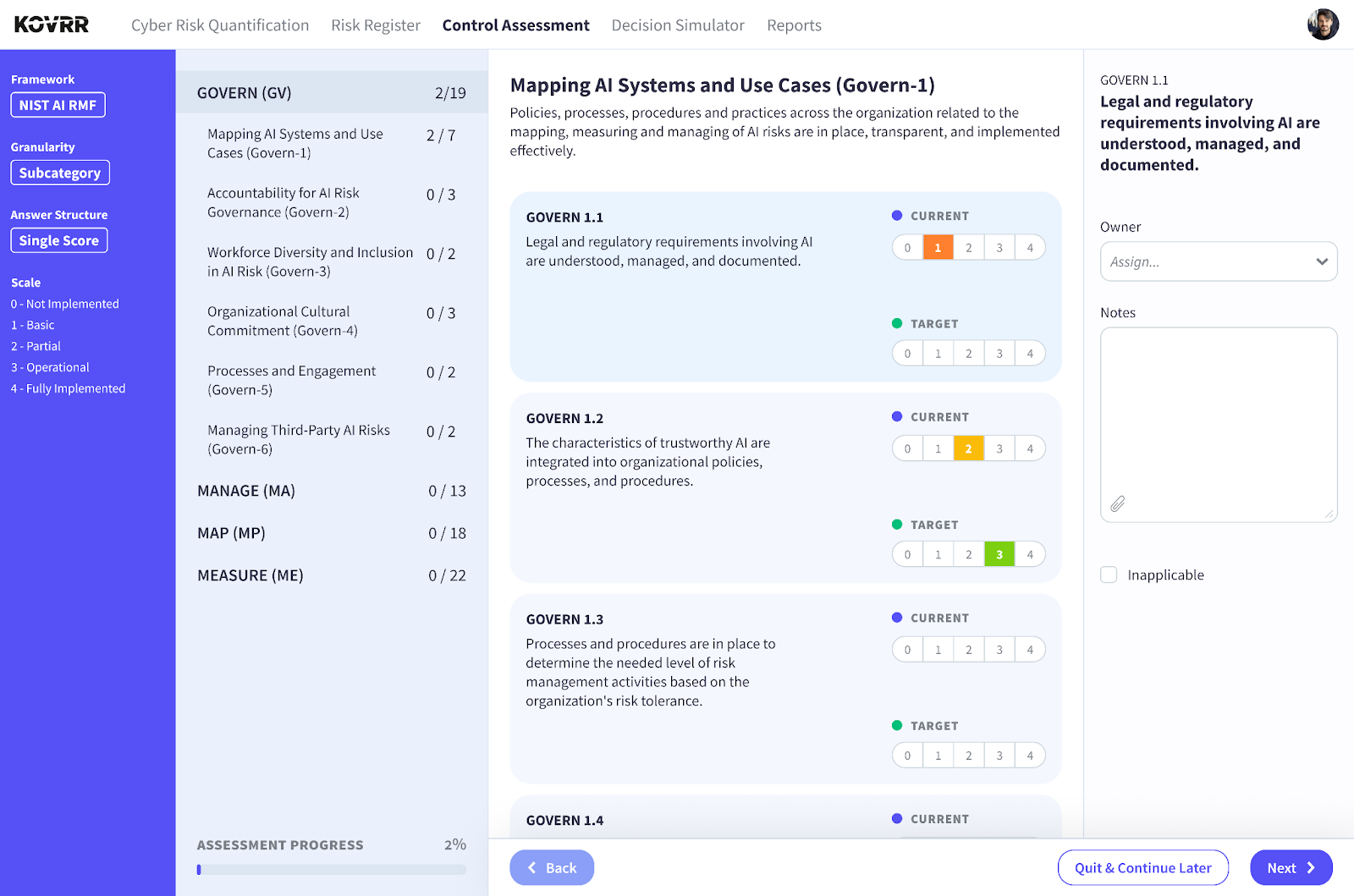

Entering Current and Target Maturity Scores

After the evaluation structure has been configured, users are directed to the maturity input dashboard, where they can enter the current score for each control group, reflecting the organization's present implementation level. They can also enter a target score representing the organization's desired future state, whether driven by business alignment or compliance benchmarks. Together, these two inputs surface maturity gaps and help teams track ongoing progress.
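The gap between the two inputs is simple arithmetic. The minimal Python sketch below records a current score and an optional target per control and computes the gap. The `ControlScore` type, the choice to treat an above-target score as no gap, and the NIST AI RMF-style IDs are assumptions for illustration, not the tool's data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ControlScore:
    control_id: str          # framework reference (illustrative IDs below)
    current: float           # present implementation level
    target: Optional[float]  # desired future state; None if not yet set


def maturity_gap(item: ControlScore) -> Optional[float]:
    """Return target minus current, or None when no target is defined.

    Assumption: a current score above target counts as no gap (0.0)
    rather than a negative gap.
    """
    if item.target is None:
        return None
    return max(item.target - item.current, 0.0)


items = [
    ControlScore("GOVERN 1.1", current=2, target=4),
    ControlScore("MAP 1.1", current=3, target=None),
    ControlScore("MEASURE 2.1", current=4, target=3),
]
gaps = {item.control_id: maturity_gap(item) for item in items}
```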

On top of specifying current and targeted maturity levels, users can assign either individual or team ownership to each control item, fostering accountability, ensuring that plans to achieve target scores are operationalized in a timely manner, and maintaining visibility into cyber and AI exposure as safeguards evolve. Kovrr’s Control Assessment also offers the option to include qualitative notes and documentation, such as known limitations or ongoing initiatives, that may help explain the selected maturity score to internal stakeholders or external auditors.

For scenarios in which a specific control is not applicable to the company's environment (e.g., it's irrelevant to the industry), users can flag it accordingly, preventing artificial deflation of the final results and ensuring a more accurate analysis. By allowing GRC leaders, SRMs, and CISOs to record both current and target cybersecurity or AI maturity scores, Kovrr’s assessment not only highlights where additional investment is necessary but also establishes a clear roadmap for continuous improvement.
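To show why the not-applicable flag matters, this hedged Python sketch stores ownership, notes, and an applicability flag on each item and averages only the applicable scores. `ControlItem`, `average_implementation`, and the sample items are hypothetical; the post does not specify how the tool aggregates results internally.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class ControlItem:
    control_id: str
    current: float
    owner: Optional[str] = None  # individual or team accountable for the item
    notes: str = ""              # context for stakeholders or auditors
    applicable: bool = True      # False when flagged as not applicable


def average_implementation(items: list[ControlItem],
                           exclude_na: bool = True) -> Optional[float]:
    """Mean current score, optionally skipping not-applicable items."""
    scores = [i.current for i in items if i.applicable or not exclude_na]
    return mean(scores) if scores else None


items = [
    ControlItem("C-01", 3, owner="Security Engineering"),
    ControlItem("C-02", 3, owner="Data Science", notes="Rollout in progress"),
    ControlItem("C-03", 0, applicable=False, notes="Not relevant to our industry"),
]
```

Counting the not-applicable item as a zero would drag the average from 3 to 2, which is the kind of artificial deflation the flag is meant to prevent.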

Maturity Gaps Analysis: Visualizing Results and Developing Strategies

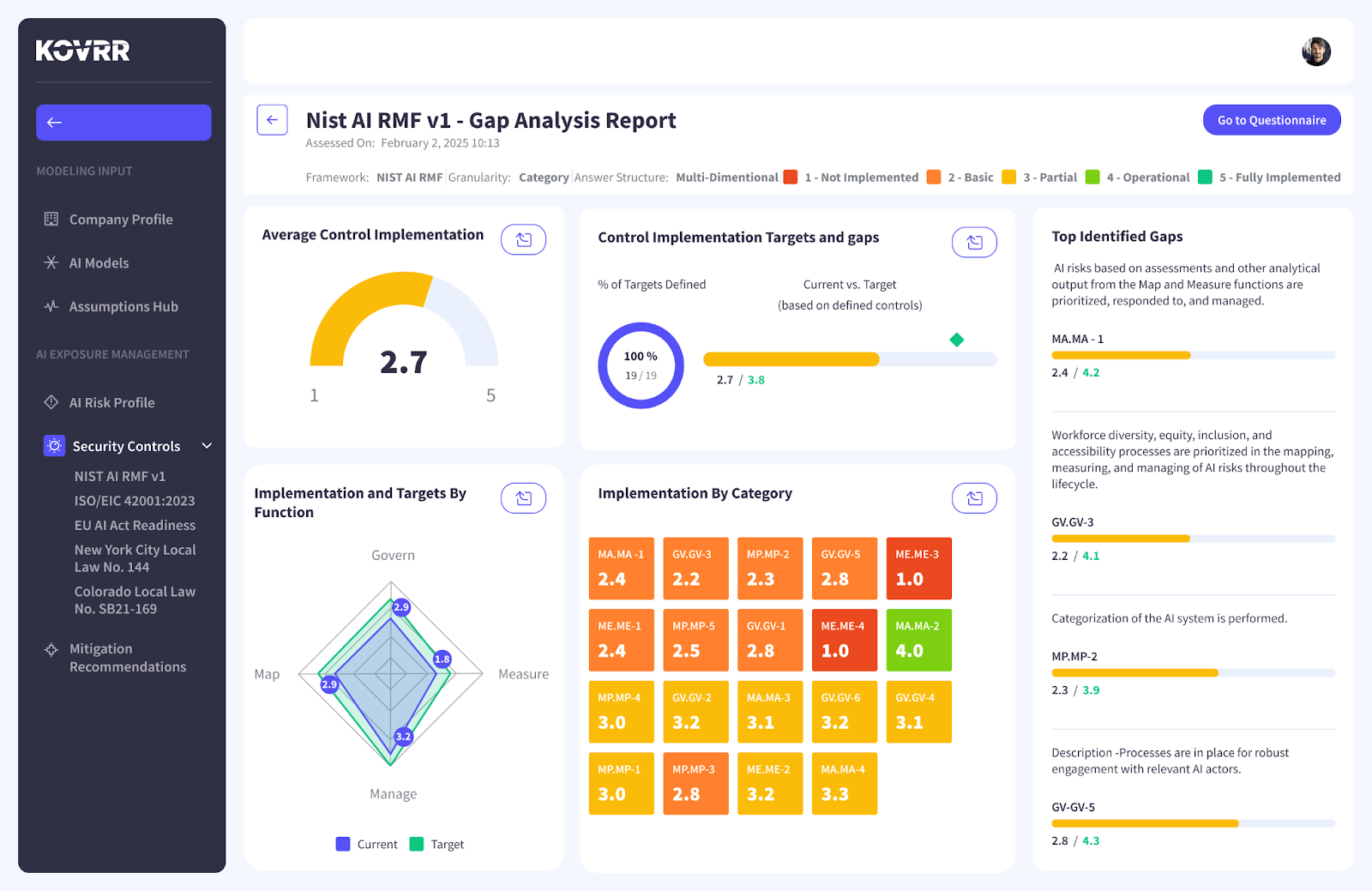

Once the assessment is complete, teams gain access to a comprehensive visual summary of their cybersecurity and AI maturity posture, bringing greater visibility into exposure and making it easier to communicate risk across the enterprise. The results dashboard, also known as the Maturity Gap Analysis, consolidates input data into intuitive charts and tables that illustrate implementation levels and gaps relative to target scores.

This structured view supports strategic analysis by identifying development needs, helping teams build more resilient programs aligned with GRC goals.

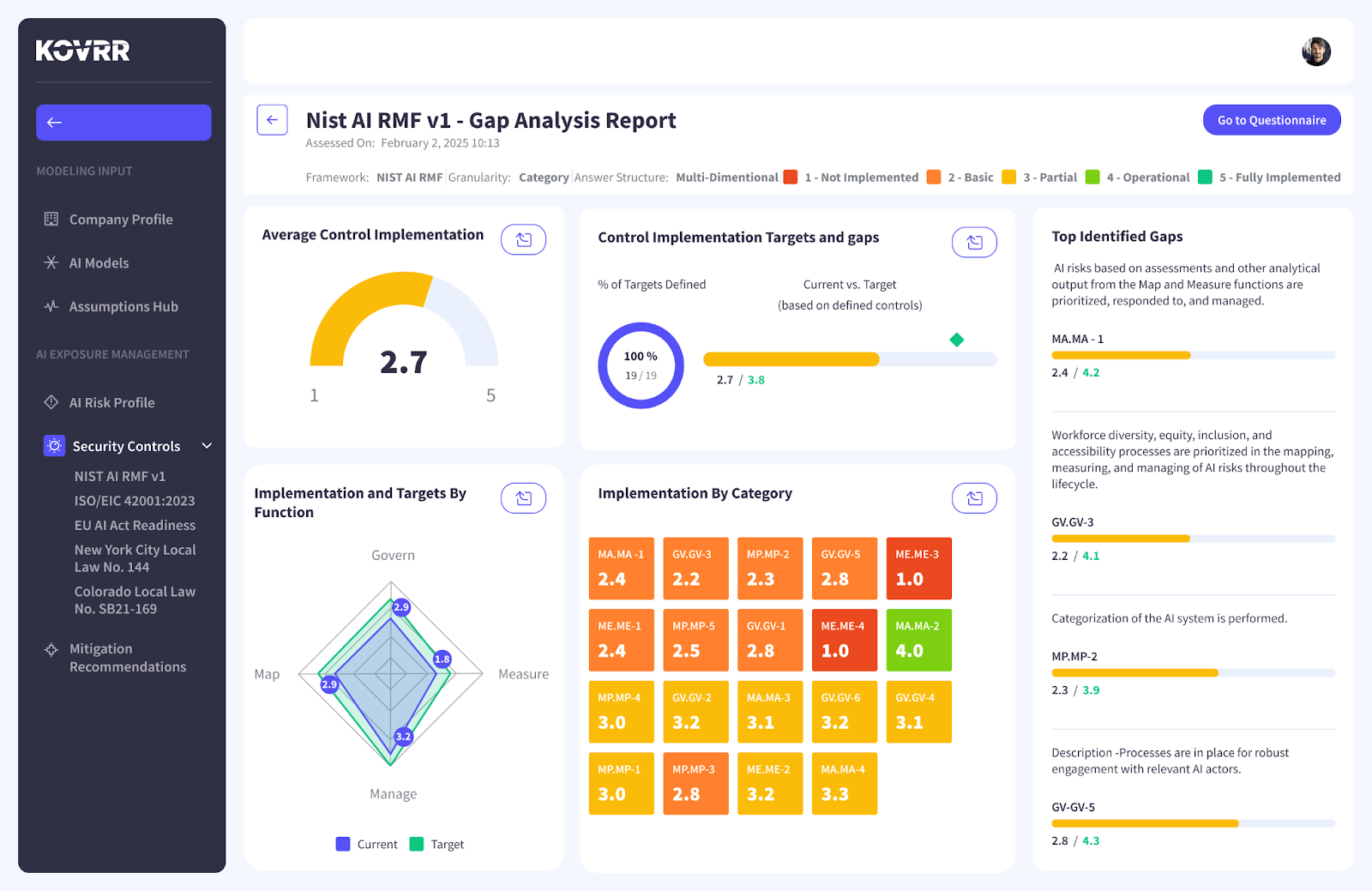

Average Control Implementation

The average implementation score, displayed as a gauge, provides a single-number snapshot of an organization's overall cybersecurity or AI maturity, based on the average of all current control scores. While high-level, this metric is useful for establishing a baseline at the start of an assessment initiative, making it easier for leaders to communicate with executives and board members, and serving as a comparison point in future evaluations.
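Comparing a baseline with a later evaluation is straightforward when both use the same scale. If an organization switches between the 0–4 and 1–5 options, one way to keep the baseline comparable is to express the average as a fraction of the scale's range. This Python sketch assumes that approach; the post does not say the tool performs such normalization, and `normalized_average` and the sample scores are hypothetical.

```python
def normalized_average(scores: list[float], scale_min: float,
                       scale_max: float) -> float:
    """Mean score expressed as a fraction of the scale's range (0.0 to 1.0)."""
    if not scores:
        raise ValueError("no scores to average")
    avg = sum(scores) / len(scores)
    return (avg - scale_min) / (scale_max - scale_min)


baseline = normalized_average([2, 3, 1], scale_min=0, scale_max=4)   # 0-4 scale
follow_up = normalized_average([3, 4, 3], scale_min=1, scale_max=5)  # 1-5 scale
improvement = follow_up - baseline
```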

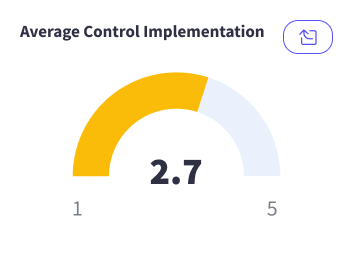

Control Implementation Targets and Gaps

The control implementation targets and gaps view highlights two key metrics: the share of cybersecurity or AI control items with target scores defined (89 percent in the visualization above) and the average difference between current and target maturity levels. In tandem, these metrics provide a quick indication of assessment completeness and program ambition, while the gap metric in particular shows how much effort is required to achieve the stated goals.
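Both headline metrics in this view can be derived from (current, target) pairs. In the Python sketch below, coverage is the fraction of items with a defined target, and the average gap is taken only over those items. Whether the product computes the average the same way is an assumption, and `target_metrics` and the sample data are hypothetical.

```python
from typing import Optional


def target_metrics(items: list[tuple[float, Optional[float]]]) -> tuple[float, float]:
    """Return (share of items with a target, average gap among those items).

    items: (current, target) pairs; target is None when not yet defined.
    """
    if not items:
        return 0.0, 0.0
    with_target = [(cur, tgt) for cur, tgt in items if tgt is not None]
    coverage = len(with_target) / len(items)
    if not with_target:
        return coverage, 0.0
    # Assumption: scores already at or above target contribute a gap of 0.
    avg_gap = sum(max(tgt - cur, 0) for cur, tgt in with_target) / len(with_target)
    return coverage, avg_gap


coverage, avg_gap = target_metrics([(1, 3), (2, 2), (0, None), (2, 4)])
```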

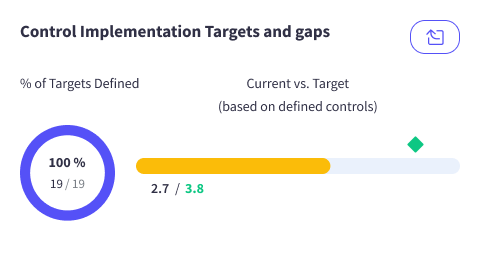

Implementation and Targets by Function

The implementation and targets by function spider chart maps the organization’s current and target maturity scores across the NIST AI RMF’s four core functions (Govern, Map, Measure, and Manage). The contrast between current (blue) and target (green) scores lets teams see which functions are most aligned with cybersecurity or AI risk management goals and which require additional investment. The chart is helpful for exposing imbalances across functions and informing resource allocation decisions.
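The data behind a spider chart like this one is a per-function average of current and target scores. Below is a minimal Python sketch, assuming simple unweighted means and hypothetical sample scores; items tagged with a function outside the four AI RMF functions are ignored.

```python
from collections import defaultdict

FUNCTIONS = ("Govern", "Map", "Measure", "Manage")  # NIST AI RMF core functions


def function_profile(items):
    """Average current and target scores per function.

    items: iterable of (function, current, target) tuples.
    Returns {function: (avg_current, avg_target)} for functions with data.
    """
    current = defaultdict(list)
    target = defaultdict(list)
    for function, cur, tgt in items:
        current[function].append(cur)
        target[function].append(tgt)
    return {
        f: (sum(current[f]) / len(current[f]), sum(target[f]) / len(target[f]))
        for f in FUNCTIONS
        if current[f]
    }


profile = function_profile([
    ("Govern", 3, 4), ("Govern", 2, 4),
    ("Map", 2, 3),
    ("Measure", 2, 3),
    ("Manage", 1, 3), ("Manage", 1, 3),
])
# The function with the widest current-to-target distance signals the largest imbalance.
widest = max(profile, key=lambda f: profile[f][1] - profile[f][0])
```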

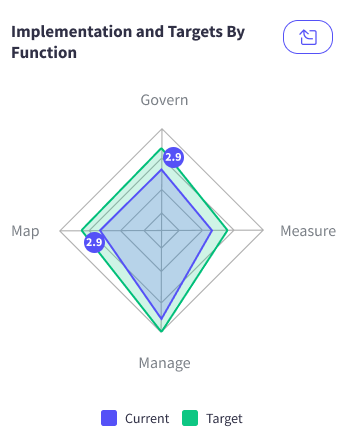

Implementation by Category

The heatmap-style view of implementation levels presents the organization’s average implementation scores across all relevant categories of the selected AI or cybersecurity framework, highlighting relative strengths, gaps, and where visibility into exposure matters most.

In the example above, categories align with the 19 defined in the NIST AI RMF, distributed across its four core functions. Each tile represents a specific control group and includes a corresponding score indicating how well the company is performing in that area. Higher scores, in green, indicate stronger maturity, while lower scores, in red and orange, signify capabilities needing attention.
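A heatmap tile reduces each category to an average score and a color. The Python sketch below assumes a 0–4 scale and hypothetical cut-offs (green at 75 percent of the maximum or above, orange from 40 percent, red below); the post does not state the thresholds the dashboard uses, and the category scores are illustrative.

```python
# Hypothetical color thresholds, as fractions of the scale maximum.
GREEN_AT = 0.75
ORANGE_AT = 0.40


def heat_band(score: float, scale_max: float = 4.0) -> str:
    ratio = score / scale_max
    if ratio >= GREEN_AT:
        return "green"   # stronger maturity
    if ratio >= ORANGE_AT:
        return "orange"  # needs attention
    return "red"         # weakest capabilities


def category_heatmap(scores_by_category: dict[str, list[float]],
                     scale_max: float = 4.0) -> dict[str, tuple[float, str]]:
    """Average each category's item scores and assign a color band."""
    tiles = {}
    for category, scores in scores_by_category.items():
        avg = sum(scores) / len(scores)
        tiles[category] = (avg, heat_band(avg, scale_max))
    return tiles


tiles = category_heatmap({
    "GOVERN 1": [3, 4],
    "MAP 1": [2, 2],
    "MANAGE 1": [0, 1],
})
```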

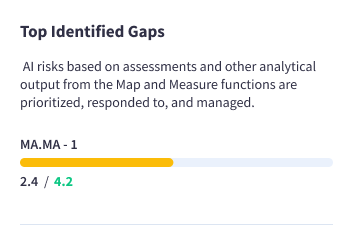

Top Identified Gaps

The top identified gaps section illuminates the framework control areas with the most substantial implementation shortfalls relative to the GRC team’s targets. Each item includes the AI or cybersecurity framework reference, a control description, the organization’s current and target scores, and the calculated gap as a percentage. For instance, in the example shown above, which uses the NIST AI RMF, the Manage function (specifically, Prioritization, Response, and Management of AI Risks) shows a significant gap, revealing that its requirements have not yet been fully implemented despite being designated a priority area.
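The post reports each gap as a percentage without defining the base. This Python sketch assumes the gap is expressed relative to the target score and ranks items accordingly; `top_gaps`, the abbreviated category descriptions, and the scores are illustrative.

```python
def top_gaps(items, n=3):
    """Rank items by gap percentage, largest first.

    items: (reference, description, current, target) tuples; target may be None.
    Assumption: gap % = (target - current) / target * 100.
    """
    rows = []
    for ref, desc, cur, tgt in items:
        if tgt and tgt > cur:  # skip missing targets and items already at target
            rows.append((ref, desc, cur, tgt, 100 * (tgt - cur) / tgt))
    rows.sort(key=lambda row: row[4], reverse=True)
    return rows[:n]


ranked = top_gaps([
    ("GOVERN 1", "Policies, processes, procedures, and practices are in place", 3, 4),
    ("MAP 1", "Context is established and understood", 2, 2),
    ("MANAGE 1", "AI risks are prioritized, responded to, and managed", 1, 4),
])
```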

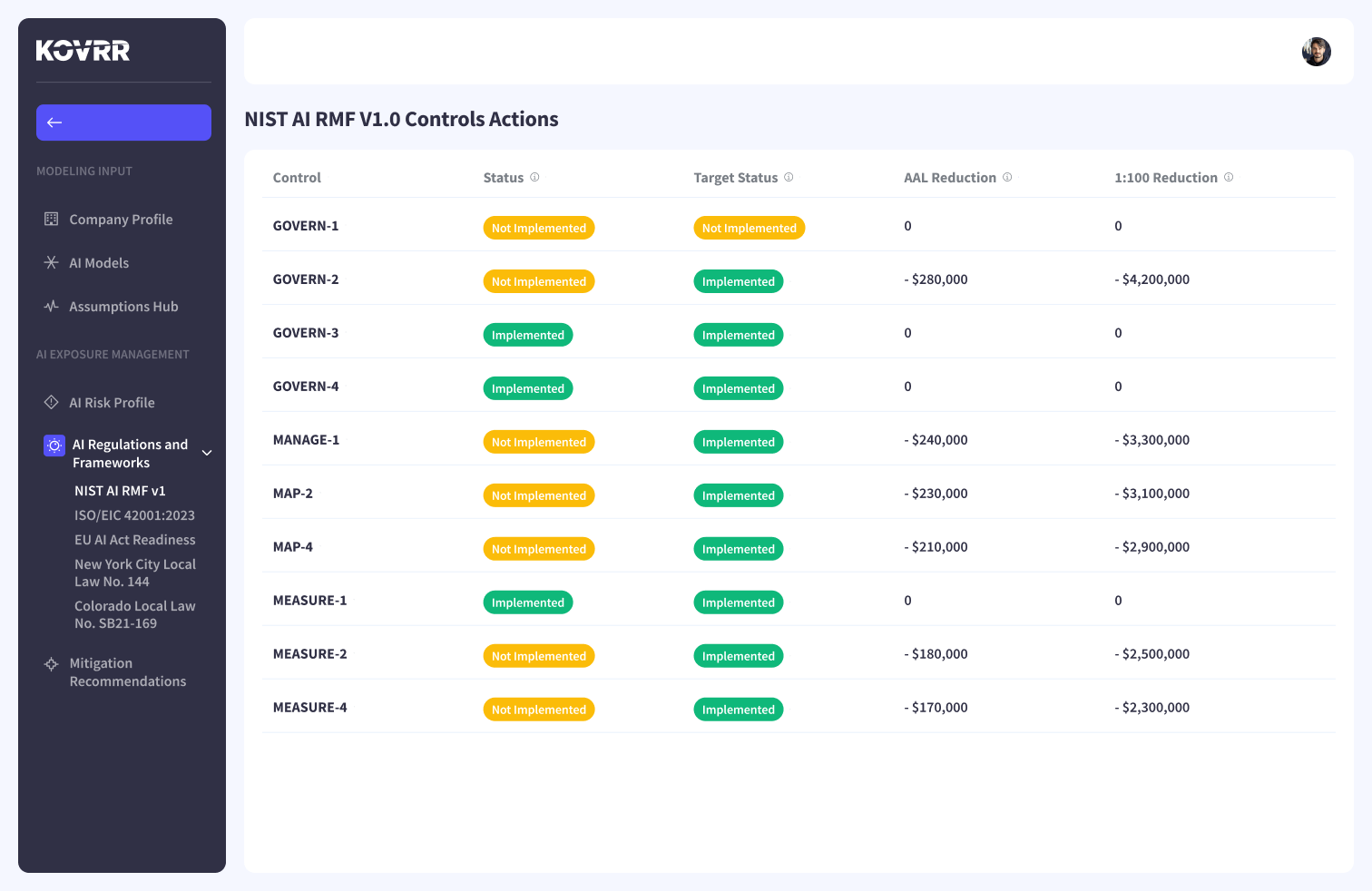

Quantifying the Gaps

Control assessments can deliver an unmatched level of transparency and visibility into both AI and cyber exposure, giving SRMs and GRC teams a clear standard on which to measure progress. However, the insights fall short when attempting to gauge the impact associated with certain gaps. A control deficiency, even when precisely calculated, doesn't indicate how much potential financial loss the company faces or which gaps ultimately pose the greatest threat.

Enhancing results with financial quantification, on the other hand, provides teams with this information, allowing them to assess which low-maturity areas carry the most significant monetary implications and then prioritize remediation based on these loss forecasts rather than on more subjective factors. For teams facing limited budgets and the need to optimize resources, this view of AI or cyber risk exposure is vital.

Whether harnessing cyber risk quantification (CRQ) or AI risk quantification, the principle is the same, with control scores becoming inputs for modeled scenarios that forecast financial and operational outcomes. For cyber, this may involve linking control shortfalls to loss events like ransomware or data breaches. For AI, it may mean mapping weaknesses against scenarios such as adversarial manipulation or data leakage. In both cases, quantification ensures maturity results move beyond static scores and provide a defensible view of risk in financial terms.
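Kovrr's quantification models are not described in this post, so the following is a generic frequency-times-severity illustration, not the product's method. It assumes each scenario has a baseline annual event frequency, a lognormal per-event loss, and a linear link between control maturity and frequency reduction; every name and number is hypothetical. The sketch ranks open gaps by the expected annual loss that closing them would avoid, which mirrors the prioritization logic described above.

```python
import math
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    base_frequency: float   # expected events per year with no effective controls
    severity_mu: float      # log-mean of the lognormal loss per event
    severity_sigma: float   # log-standard-deviation of the loss per event
    max_reduction: float    # share of frequency removed at full maturity (0 to 1)


def expected_annual_loss(s: Scenario, maturity: float, scale_max: float = 4.0) -> float:
    """Frequency x mean severity; frequency shrinks linearly as maturity rises (assumed)."""
    frequency = s.base_frequency * (1 - s.max_reduction * maturity / scale_max)
    mean_severity = math.exp(s.severity_mu + s.severity_sigma ** 2 / 2)  # lognormal mean
    return frequency * mean_severity


def rank_by_loss_reduction(gaps):
    """gaps: (control_id, scenario, current, target). Largest avoided loss first."""
    ranked = [
        (cid, expected_annual_loss(s, cur) - expected_annual_loss(s, tgt))
        for cid, s, cur, tgt in gaps
    ]
    return sorted(ranked, key=lambda row: row[1], reverse=True)


# Hypothetical scenarios and control IDs, for illustration only.
ransomware = Scenario("Ransomware", 0.3, 13.0, 1.2, 0.8)
ai_leakage = Scenario("AI data leakage", 0.2, 12.0, 1.0, 0.7)
priorities = rank_by_loss_reduction([
    ("AI-01", ai_leakage, 1, 3),
    ("CYBER-01", ransomware, 1, 3),
])
```

Two gaps of identical size (1 to 3) end up with very different priorities once each is tied to a loss scenario, which is the point the quantification argument makes.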

Furthermore, in translating technical, context-specific findings into objective, business-relevant metrics, quantified control assessments make it easier to communicate risk and secure leadership buy-in. Indeed, coupling maturity scoring with quantification solutions, like the one offered by Kovrr, enables stakeholders to build a more actionable, data-driven roadmap that supports smarter investment decisions.

Driving Data-Backed Governance and Risk Management Decisions

Control assessments are now a core element of modern GRC programs, giving security and risk leaders a clear framework for understanding where their defenses stand and how to strengthen them, along with visibility into AI and cyber exposure. Still, even with the best intentions, execution often breaks down in practice. Overly complicated scoring models or dependence on executives with limited bandwidth can make the process harder than necessary, reducing follow-through and value.

Kovrr’s Control Assessment tool addresses these challenges, among others, by providing a structured, streamlined approach to analyzing current AI and cyber risk management capabilities. It simplifies the way organizations evaluate their posture and highlights the areas where progress is most needed.

When quantified, however, these insights become even more actionable, transforming scores and control gaps into financial implications that can guide the decision-making process. Ultimately, this added layer of intelligence empowers organizations to move from generalized improvements to precision-targeted mitigation actions that are anchored in measurable impact.

Start Kovrr’s Control Assessment to benchmark AI and cybersecurity program maturity, gain visibility into exposure, and build a data-driven mitigation strategy.
