
AI Risk Management: Defining, Measuring, & Mitigating the Risks of AI

November 12, 2025

TL;DR

- AI is transforming business operations at scale while risks evolve just as quickly, requiring structured methods to manage exposure and safeguard enterprise value.

- Breaking AI risk into categories, such as operational and privacy, helps organizations understand different dimensions of exposure, even when those risks overlap.

- A widening oversight gap exists as organizations adopt GenAI without adequate safeguards, often lacking governance policies to prevent shadow AI proliferation.

- AI risk assessments provide visibility into where AI is used and how mature existing safeguards are, creating a baseline for structured, defensible risk reduction.

- AI risk quantification uses modeling techniques to forecast financial and operational impact, producing outputs that inform more resilient decision-making frameworks.

- The added benefits of quantification include stronger investment prioritization, improved board-level communication, informed governance decisions, and optimized insurance strategies for GenAI-related risks.

Managing AI Risk Is a Business Imperative

Artificial intelligence (AI) used to be something that only existed in science fiction novels and dystopian movies. Then, technology advanced, and it became a reality, being slowly implemented into experimental projects and niche use cases. Now, however, it is shaping real business outcomes, accelerating decisions and automating processes in ways that are becoming commonplace in daily market operations.

But while the benefits of using GenAI in the workflow are immense and multiplying, so too are the risks, and many of them are emerging faster than stakeholders can fully understand or address. A single weakness in an AI system, for instance, can affect the organization in many ways, not least the compromise of millions of highly sensitive data records. Consequences can easily cascade into regulatory violations and lasting reputational damage as well.

These outcomes can feel unpredictable, but they are not. Gaining visibility into where and how AI operates within the organization is the first step toward controlling the risks it introduces. The sooner organizations adopt a structured approach to identifying, measuring, and managing AI risk, the better positioned they will be to leverage these life-changing tools. Proactive AI risk management secures AI’s role as a sustainable business asset.

What Is AI Risk?

Enterprise-level AI risk is the potential of GenAI or any other type of artificial intelligence system to cause losses for an organization or its stakeholders. It can originate from various sources, which is why it’s often broken down into distinct types. These AI risk categories help illuminate the nature of each risk, along with the conditions that make it more likely to occur and the precise ways in which it might leave an impact.

To put this in perspective, the U.S. Department of Homeland Security highlights three foundational ways AI can introduce risk:

- The use of AI to enhance, plan, or scale physical or cyberattacks on critical infrastructure.

- Targeted attacks on GenAI systems themselves, especially those supporting critical operations.

- Failures in the design or implementation of AI tools, leading to malfunctions or unintended consequences that disrupt essential services.

Those examples capture the broad risks at a national level, but within organizations, the picture is even more layered. In practice, a single AI-related incident usually spans multiple types at once, compounding the consequences and making the situation even more difficult to manage. Still, by understanding these different types of AI risk, stakeholders can more easily build a robust, comprehensive risk management strategy.

AI Risk Type 1: Cybersecurity Risk

Cybersecurity risk is the AI risk category stakeholders are generally most familiar with. It refers to the possibility that data or critical systems are compromised through digital means. For instance, attackers may exploit vulnerabilities in public-facing AI applications, gaining unauthorized access to the organization and the power to manipulate outputs in ways that erode trust.

Managing this aspect of AI risk involves securing the AI supply chain and ensuring visibility into vulnerabilities so that AI systems are protected with the same rigor as other critical assets.

AI Risk Type 2: Operational Risk

Operational risk is the disruption caused by AI-related vulnerabilities that interfere with an organization’s ability to function as intended. AI operational risks can stem from technical faults in models or flawed system integration. Often, these operational problems arise gradually, as AI systems drift below minimum performance standards without being detected. Effective management requires actions such as continuous monitoring and, more importantly, documented maintenance accountability.

AI Risk Type 3: Bias & Ethical Risk

Bias and ethical risk in GenAI arise when systems produce outcomes that are misaligned with established workplace and societal standards. Problems can originate from skewed or incomplete training data or disagreements about how AI is applied. When this risk materializes, the impact can be severe, ranging from legal exposure to internal cultural harm and the breakdown of employee trust.

Addressing bias and ethical issues demands visibility into how training data is collected and applied, along with close scrutiny of sources and the incorporation of ethical review into every stage of AI development.

AI Risk Type 4: Privacy Risk

Privacy risk in AI concerns the misuse of or unauthorized access to sensitive or personal information. This mishandling usually occurs when models are trained on data that contains identifiable details or when GenAI tools interact with unsecured data sources. In some cases, even anonymized data can be re-identified through correlation with other datasets, which makes this risk harder to contain. Managing the privacy component of AI thus requires that cyber GRC teams enforce strict data governance and maintain control over training and inference data.

AI Risk Type 5: Regulatory & Compliance Risk

Regulatory and compliance risk in AI arises when systems or their subsequent usage fail to meet legal or industry requirements. The consequences of non-compliance may include fines or operational restrictions, along with reputational damage that can limit future market opportunities. Mitigating these types of AI risks involves integrating regulatory awareness into AI strategy and making certain that compliance is treated as an ongoing operational priority rather than a one-time requirement.

AI Risk Type 6: Reputational & Business Risk

Reputational and business risk in AI derives from actions or outcomes that weaken the organization’s position in the market. Public trust can erode quickly if AI-driven decisions are perceived as biased, unsafe, or poorly controlled, and in many situations the damage extends to long-term brand identity, making recovery costly and slow. Accounting for this risk requires both transparency from businesses regarding how AI is used and an explicit commitment to aligning AI initiatives with internal policies and publicized core values.

AI Risk Type 7: Societal & Existential Risk

Finally, societal and existential risk in AI involves impacts that extend beyond the organization. These types of risks include large-scale job displacement, erosion of democratic processes through AI-enabled misinformation, or, at the more extreme end, those science fiction scenarios in which AI poses a direct threat to human survival or the continuity of civilization. Addressing this category of risk calls for industry collaboration and demands that regulators enact legislation that helps to ensure, as much as it can, that AI development aligns with the broader public interest.

MITRE ATLAS: Mapping How AI Risks Unfold

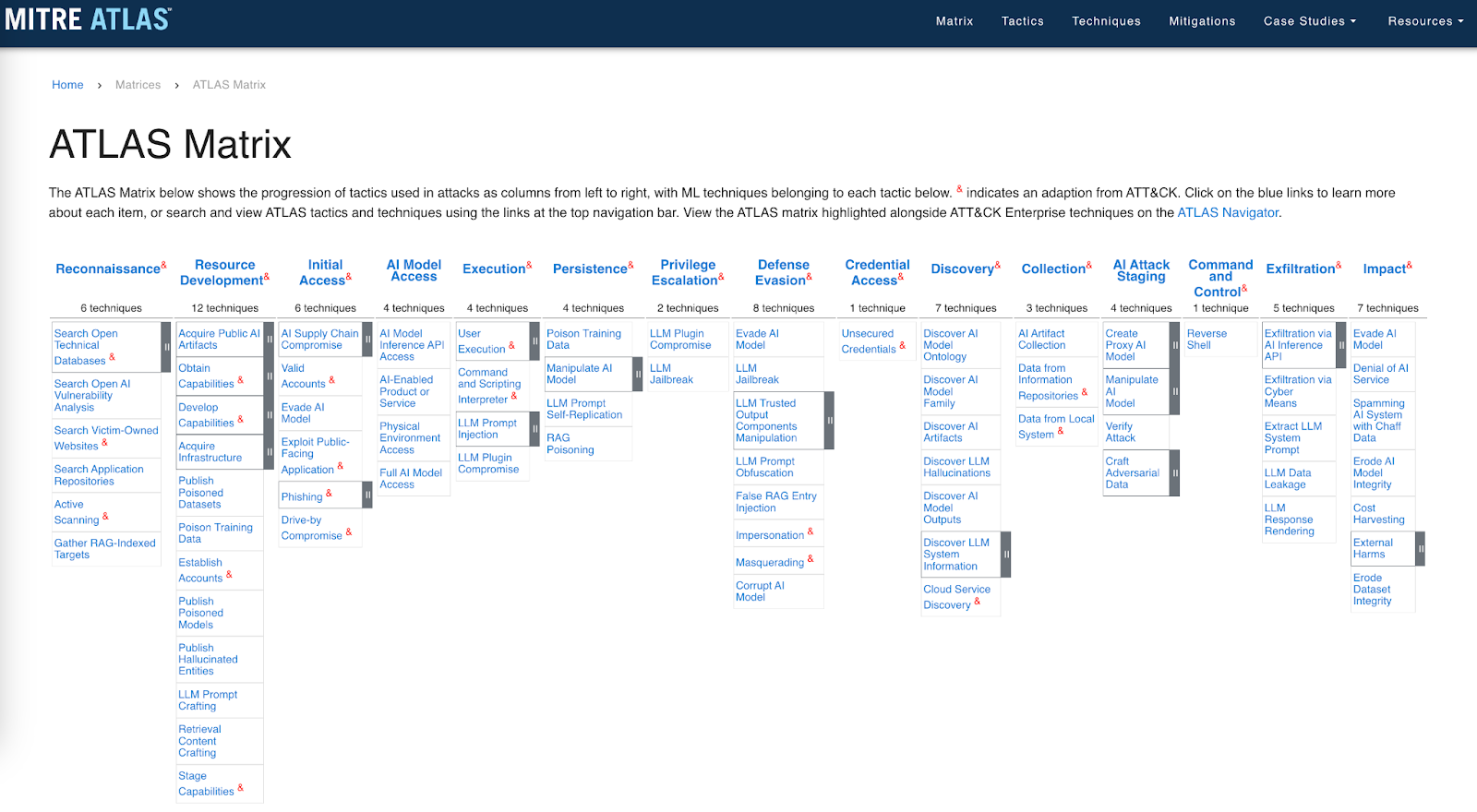

The seven categories of AI risk describe where exposure exists. But to help security and risk managers understand how those risks may play out in the organizational setting, the MITRE Corporation published the Adversarial Threat Landscape for Artificial-Intelligence Systems (ATLAS) framework. Much like the MITRE ATT&CK framework has become the standard reference for conventional cyberattacks, MITRE ATLAS provides a structured map of how adversaries exploit GenAI systems in the wild.

Specifically, it outlines the tactics and techniques used across the full lifecycle of an AI-related incident, from gaining initial access through a supply chain compromise or phishing, to persisting by poisoning training data, and ultimately to disrupting or exfiltrating critical AI assets. In doing so, ATLAS connects abstract risks such as cybersecurity or operational failure to concrete adversary behavior, offering risk management teams a means of proactively determining how AI-specific incidents might unfold and prioritizing safeguards accordingly.

Why AI Risk Management Must Be Implemented Formally

AI is rapidly merging into the DNA of the modern workplace environment, much in the same way that computers did in the mid-1980s. New systems are being deployed and, in many situations, are being fed sensitive data without adequate safeguards in place. Essentially, there is a widening oversight gap that governance, risk, and compliance (GRC) teams cannot span fast enough, leaving businesses and their customers exposed to unknown, unprecedented danger.

In fact, the 2025 Cost of a Data Breach Report from IBM and the Ponemon Institute found that 97 percent of organizations hit by an AI-related security incident lacked proper AI access controls. Another 63 percent had not established governance policies for AI usage at all, creating ample room for shadow AI to spread and accumulate risk that remained largely invisible until it caused significant disruption.

That lack of governance and AI risk management structure proved costly, with the global average cost of a data breach in 2025 standing at $4.4 million. Although the same report determined that companies using GenAI extensively in their security operations can reduce that figure by an average of $1.9 million, those savings depend on disciplined oversight. Without proper controls in place, the same technology that can lower costs can just as easily amplify the damage.

Additionally, the urgency for more comprehensive, standardized AI GRC programs is being amplified by legislative pressure. The European Union's AI Act, for instance, sets a baseline as the first regulation on AI by a major regulator, mandating an extensive set of binding requirements for how AI is assessed, monitored, and controlled. Organizations that embed risk management into the AI adoption process from the outset will be able to both meet these obligations and minimize AI-related losses, while those who wait may face the consequences.

What Is an AI Risk Assessment?

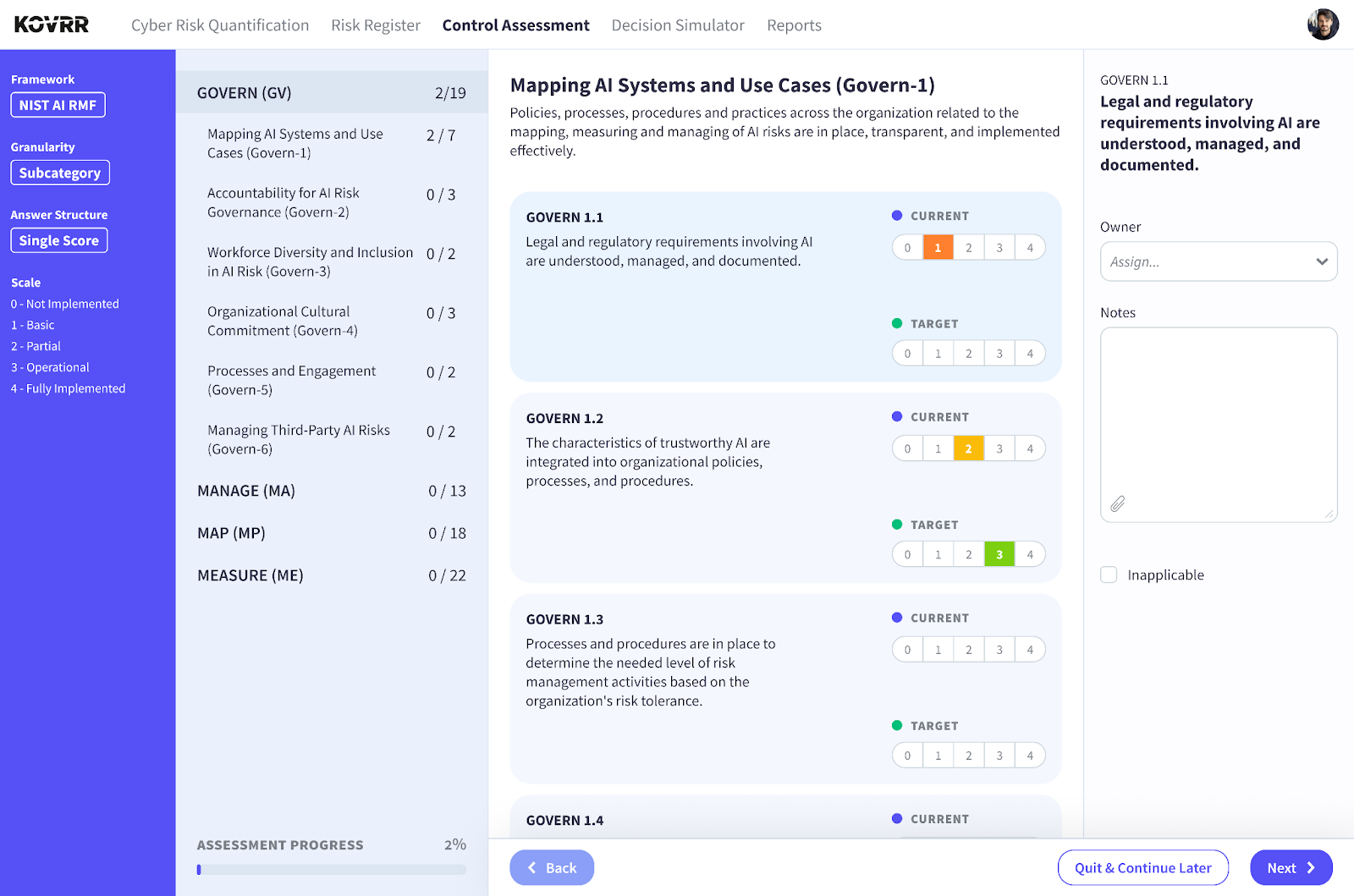

The first step in managing any business risk, be it AI, cyber, or otherwise, is to systematically discern the organization's current level of exposure, taking into account any safeguards and policies already in place to minimize it. An AI risk assessment is the structured process that provides this visibility, allowing stakeholders to thoroughly examine how AI is being used across the enterprise and evaluate whether existing controls are sufficient to a degree that aligns with risk appetite.

More than analyzing technical safeguards, though, a comprehensive risk assessment will also review critical safety and resilience factors such as governance structures, data management practices, model monitoring procedures, and incident response readiness. A competent assessor will also use the opportunity to uncover situations in which GenAI is being used outside of approved channels, thereby creating a major and potentially expensive source of hidden risk.

Even though AI risk is still an emerging area, recognized standards bodies have already developed frameworks to guide assessment. The NIST AI Risk Management Framework (AI RMF) and ISO/IEC 42001, for example, are already being employed by leading enterprises to evaluate AI usage in a consistent, defensible way. By mapping both the strengths and weaknesses, an AI risk assessment provides stakeholders with a solid foundation for building focused, effective risk reduction plans.

Benefits of Conducting an AI Risk Assessment

Risk assessments should not merely be employed to demonstrate that an evaluation has taken place. When treated as an opportunity to uncover valuable exposure insights, they actually have the potential to deliver concrete results that guide decision-making and bolster measurable improvements. Outputs should include an inventory that creates visibility into where and how GenAI is used in the organization and a rundown of current safeguard maturity vis-à-vis a recognized AI risk management framework.

Even more beneficial is to pair these present control levels with a defined target state that reflects the organization's risk appetite and regulatory obligations. The best AI risk assessments will offer a means for GRC teams to easily identify the gaps between the current and target states, set priorities regarding which areas they should invest in, and track progress over time. Taken together, this information can be transformed into an action plan that improves resilience and ensures AI use remains aligned with business objectives.
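As a rough illustration of pairing current control levels with a target state, the sketch below ranks control domains by the size of their gap. The domain names, the 0 to 5 maturity scale, and the scores are all hypothetical assumptions made for the example, not values taken from any particular framework.

```python
from dataclasses import dataclass


@dataclass
class ControlScore:
    domain: str   # hypothetical control domain name
    current: int  # assessed maturity on an assumed 0-5 scale
    target: int   # desired maturity on the same assumed scale


def rank_gaps(scores):
    """Return (domain, gap) pairs for domains below target, largest gap first."""
    gaps = [(s.domain, s.target - s.current) for s in scores if s.target > s.current]
    return sorted(gaps, key=lambda pair: pair[1], reverse=True)


# Illustrative assessment output; every value here is made up.
scores = [
    ControlScore("AI usage governance", current=1, target=4),
    ControlScore("Model monitoring", current=2, target=3),
    ControlScore("Incident response", current=3, target=3),
    ControlScore("Data management", current=2, target=4),
]

for domain, gap in rank_gaps(scores):
    print(f"{domain}: gap of {gap}")
```

Re-running the same ranking after each reassessment is one simple way to track progress over time, since domains drop off the list as they reach their target level.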

In the end, an AI risk assessment will be much more than a record of compliance. It will become a defensible account of due diligence and a roadmap for building a targeted risk reduction plan. It also establishes a repeatable process for reassessing risk as GenAI systems evolve, ensuring that governance and safeguards adapt in step with innovation and creating a solid baseline for advancing toward more sophisticated capabilities such as AI risk quantification.

What Is AI Risk Quantification?

When guided by a clear objective, the completion of an AI risk assessment will illuminate several critical metrics defining the organization's exposure, most notably the gaps between current and target control levels, that help inform governance decisions and strengthen accountability. What those gaps and other insights do not reveal, however, is how control levels affect the likelihood of exploitation or the scale of potential losses.

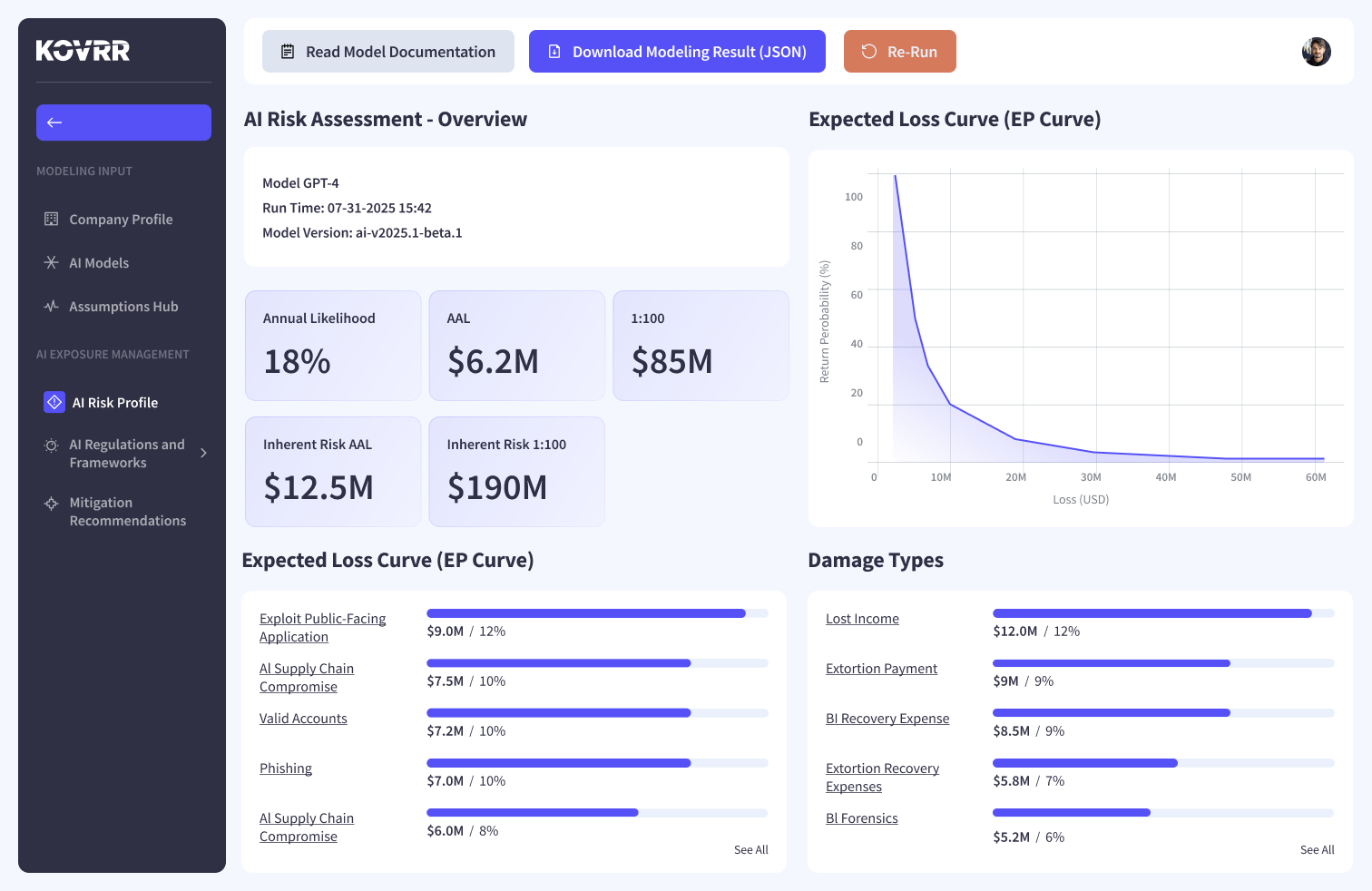

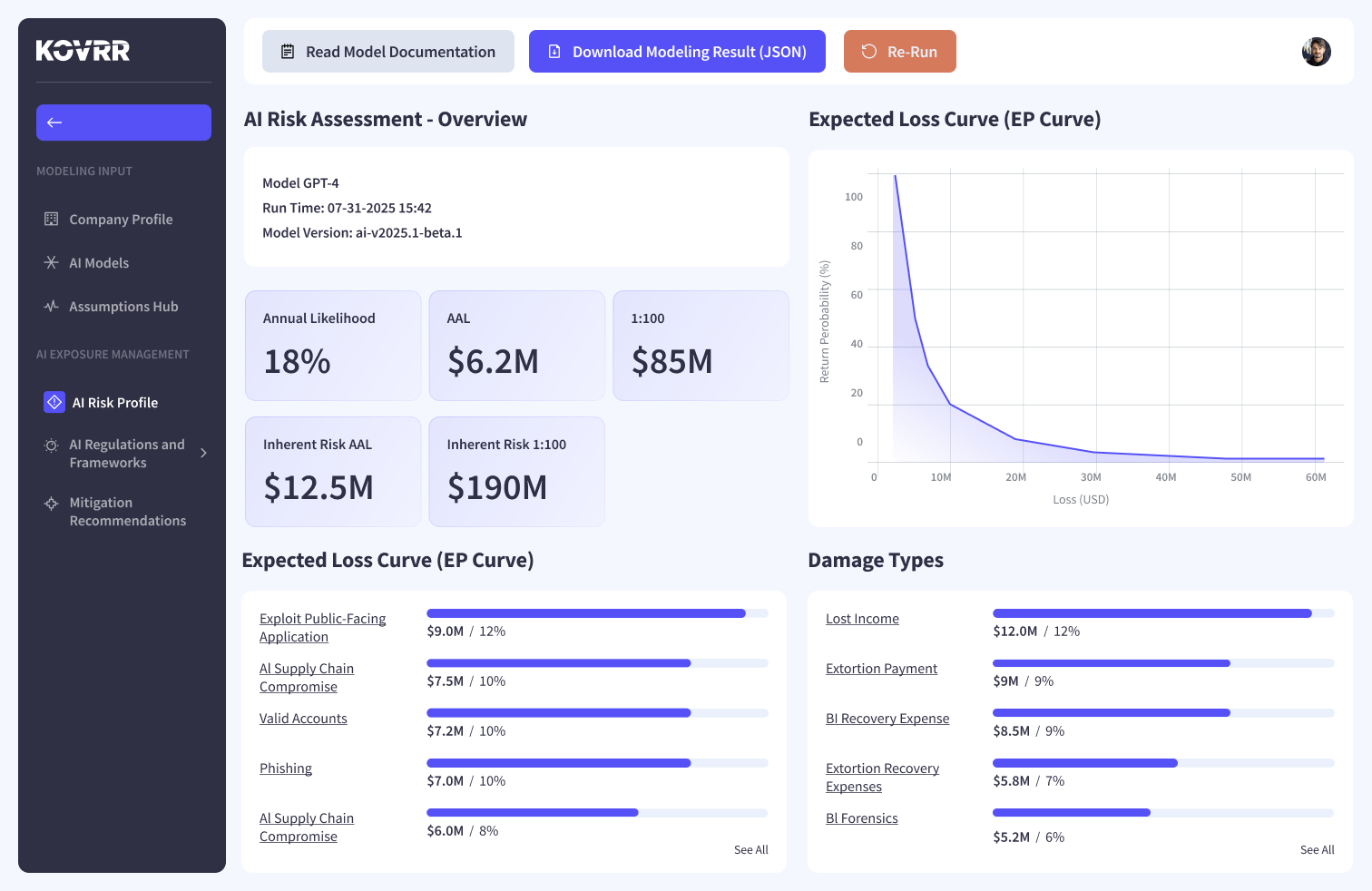

Obtaining these more concrete definitions of exposure requires the next step of quantification. AI risk quantification is the practice of modeling AI-related threats in order to forecast how they are likely to affect the business over a defined period, typically the year ahead. The process begins with the models ingesting both internal and external data, such as AI risk assessment outputs, incident records, firmographics, and industry-specific threat intelligence.

This foundational information is then used to build a bespoke catalog of potential AI-related events, custom-tailored to the organization's profile to ensure the modeled scenarios are realistic and relevant rather than generic. Next, advanced statistical modeling techniques, such as Monte Carlo simulation, are applied. The model runs thousands of iterations of the upcoming year, each one representing how AI-related risks could unfold and affect the business under varying conditions.
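To make the simulation step more concrete, here is a minimal Monte Carlo sketch using only the Python standard library. It assumes a hypothetical three-scenario catalog, Poisson-distributed event counts, and lognormally distributed loss sizes. These distributions and every parameter value are illustrative assumptions; a real quantification model would calibrate them from assessment outputs and threat intelligence.

```python
import math
import random

# Hypothetical scenario catalog (all values are illustrative assumptions):
# (name, expected events per year, median loss in USD, lognormal sigma)
SCENARIOS = [
    ("Sensitive data exposed via GenAI tool", 0.30, 400_000, 1.0),
    ("Training data poisoning", 0.05, 1_500_000, 1.2),
    ("Model outage disrupts operations", 0.50, 120_000, 0.8),
]


def poisson(rng, lam):
    """Draw a Poisson-distributed event count (Knuth's method, fine for small lam)."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulate_year(rng):
    """Total loss for one simulated year across all scenarios."""
    total = 0.0
    for _name, rate, median_loss, sigma in SCENARIOS:
        for _ in range(poisson(rng, rate)):
            # exp(mu) is the median of a lognormal, so mu = log(median_loss).
            total += rng.lognormvariate(math.log(median_loss), sigma)
    return total


def simulate(n_years=10_000, seed=42):
    """Run n_years independent simulated years with a fixed seed for repeatability."""
    rng = random.Random(seed)
    return [simulate_year(rng) for _ in range(n_years)]


losses = simulate()
print(f"Mean simulated annual loss: ${sum(losses) / len(losses):,.0f}")
print(f"Share of simulated years with no loss: {losses.count(0.0) / len(losses):.1%}")
```

Each element of `losses` represents one possible version of the coming year, which is the raw material that summary outputs are built from.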

The outputs distill these simulations into measurable results, most prominently expressed through a loss exceedance curve (LEC). This curve displays the full range of possible outcomes, from routine incidents to extreme, low-probability events, along with the likelihood of losses exceeding different thresholds. From there, the data can be segmented further to uncover more granular insights, equipping stakeholders to make more informed, defensible decisions about managing AI risk.
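The points on a loss exceedance curve can be sketched as simple counts over simulated annual losses. The example below assumes a tiny, hand-written list of ten simulated years so that the arithmetic is easy to follow; the loss values and thresholds are hypothetical.

```python
def exceedance_probability(losses, threshold):
    """Fraction of simulated years whose total loss exceeds the threshold."""
    return sum(1 for loss in losses if loss > threshold) / len(losses)


def loss_exceedance_curve(losses, thresholds):
    """Return (threshold, probability of exceeding it) for each threshold."""
    return [(t, exceedance_probability(losses, t)) for t in thresholds]


# Ten hypothetical simulated annual losses in USD (illustrative only).
simulated_losses = [
    0, 0, 0, 50_000, 120_000, 300_000, 750_000, 1_200_000, 2_500_000, 6_000_000,
]

for threshold, prob in loss_exceedance_curve(
    simulated_losses, [100_000, 1_000_000, 5_000_000]
):
    print(f"P(annual loss > ${threshold:,}) = {prob:.0%}")
# P(annual loss > $100,000) = 60%
# P(annual loss > $1,000,000) = 30%
# P(annual loss > $5,000,000) = 10%
```

With thousands of simulated years instead of ten, the same calculation over a finer grid of thresholds yields a smooth curve that can be plotted or segmented by scenario.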

The Added Benefits of AI Risk Quantification

With AI risk quantification, exposure is translated into practical, financial terms that can be incorporated into other decision-making frameworks. Once AI risk ceases to be an abstract concept and becomes a factor that can be weighed against investment and governance priorities, AI risk management itself shifts from a narrow control exercise to an integral part of enterprise planning, shaping how the organization prepares for future uncertainty.

Investment Prioritization and ROI

Quantification provides GRC leaders with the ability to objectively evaluate which AI-related risks hold the greatest potential threat to the organization and which safeguards produce the most significant reduction in modeled losses, turning investment planning and resource allocation into a data-driven process. Teams are set up to prioritize the initiatives that deliver the highest return in reduced exposure and highlight when the financial benefit of governance and security controls outweighs their cost.
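One simple way to express this comparison is to set each safeguard's reduction in modeled annual loss against its annual cost. The sketch below assumes a hypothetical ROI definition of (reduction minus cost) divided by cost, and the control names and dollar figures are invented for illustration.

```python
def control_roi(baseline_loss, residual_loss, annual_cost):
    """Return (risk reduction, ROI), with ROI = (reduction - cost) / cost."""
    reduction = baseline_loss - residual_loss
    return reduction, (reduction - annual_cost) / annual_cost


# Hypothetical modeled figures in USD:
# (baseline annual loss, annual loss with the control in place, annual cost)
candidates = {
    "AI access controls": (1_000_000, 600_000, 100_000),
    "Usage governance policy": (1_000_000, 850_000, 50_000),
    "Model monitoring": (1_000_000, 900_000, 200_000),
}

# Rank candidate controls by ROI, highest first.
ranked = sorted(
    candidates.items(), key=lambda item: control_roi(*item[1])[1], reverse=True
)

for name, figures in ranked:
    reduction, roi = control_roi(*figures)
    print(f"{name}: reduces modeled loss by ${reduction:,}, ROI {roi:.0%}")
# AI access controls: reduces modeled loss by $400,000, ROI 300%
# Usage governance policy: reduces modeled loss by $150,000, ROI 200%
# Model monitoring: reduces modeled loss by $100,000, ROI -50%
```

A negative ROI, as in the last line, flags a control whose modeled benefit does not cover its cost under these assumptions, although it may still be required for regulatory reasons.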

Executive and Board Communication

When AI risk is quantified, it becomes an understandable business risk that resonates at the executive and board level. Rather than discussing control gaps in technical terms or score averages, GRC leaders can present the likelihood of AI risk events occurring, along with the potential cost of defined scenarios. This more familiar language creates a common ground for discussions, helping to elevate AI risk as a strategic concern and embed it into broader corporate decision-making processes.

Governance and Capital Planning

AI risk quantification helps leadership set risk appetite and tolerance levels that accurately account for the organization's unique exposure. With the modeled distributions of possible loss outcomes, decision-makers can determine whether to strengthen oversight, increase capital reserves, or expand investment in AI risk management programs. In this way, quantification delivers the evidence base for governance choices that balance risk appetite with high-level enterprise aims.

Insurance Optimization

While fully standalone AI insurance policies exist, they are still rare. Most organizations today address AI risk through add-ons to existing policies. In either case, AI risk quantification provides the visibility needed to understand how AI risk exposure interacts with coverage, highlighting potential gaps in terms and conditions and strengthening renewal negotiations. Presenting a quantified view of AI exposure also positions enterprises more favorably with underwriters, supporting better agreements and ensuring policies reflect the real scale of AI-related risk.

Building Resilience Through Strategic AI Risk Management

GenAI is revolutionary, reshaping business at a remarkable pace. The issue is that its risks are evolving just as quickly, and organizations that push forward without examining those risks closely and systematically will often end up paying a significant price. A wiser course of action is to treat AI the same way other enterprise risks are treated, leveraging structured assessments and quantification.

Control assessments create visibility into where AI is being used and whether protections match expectations, while quantification builds on that foundation, forecasting how different scenarios could play out financially and operationally. Combined, these practices make AI risk something that GRC leaders can manage rather than something they merely have to endure.

AI risk quantification in particular allows stakeholders to approach governance and investment with data-driven evidence instead of guesswork, and to view AI adoption as both the opportunity and responsibility that it is. Companies that start adopting this approach now will be far better positioned to withstand disruption and build confidence as GenAI becomes inseparable from business operations and strategy.

To explore how your organization can approach AI risk management with greater structure and foresight, schedule a free demo with our team and learn more about our AI risk offerings.