
AI Risk Visibility: The Foundation of Responsible AI Governance

November 26, 2025

TL;DR

- AI visibility has become a prerequisite for responsible governance, yet many organizations still operate without understanding their AI assets or true exposure.

- The absence of visibility leaves oversight reactive and fragmented, making governance programs ineffective and disconnected from daily AI use.

- Gaining AI visibility means identifying where AI assets operate, how safeguards perform, and where risks accumulate across business functions.

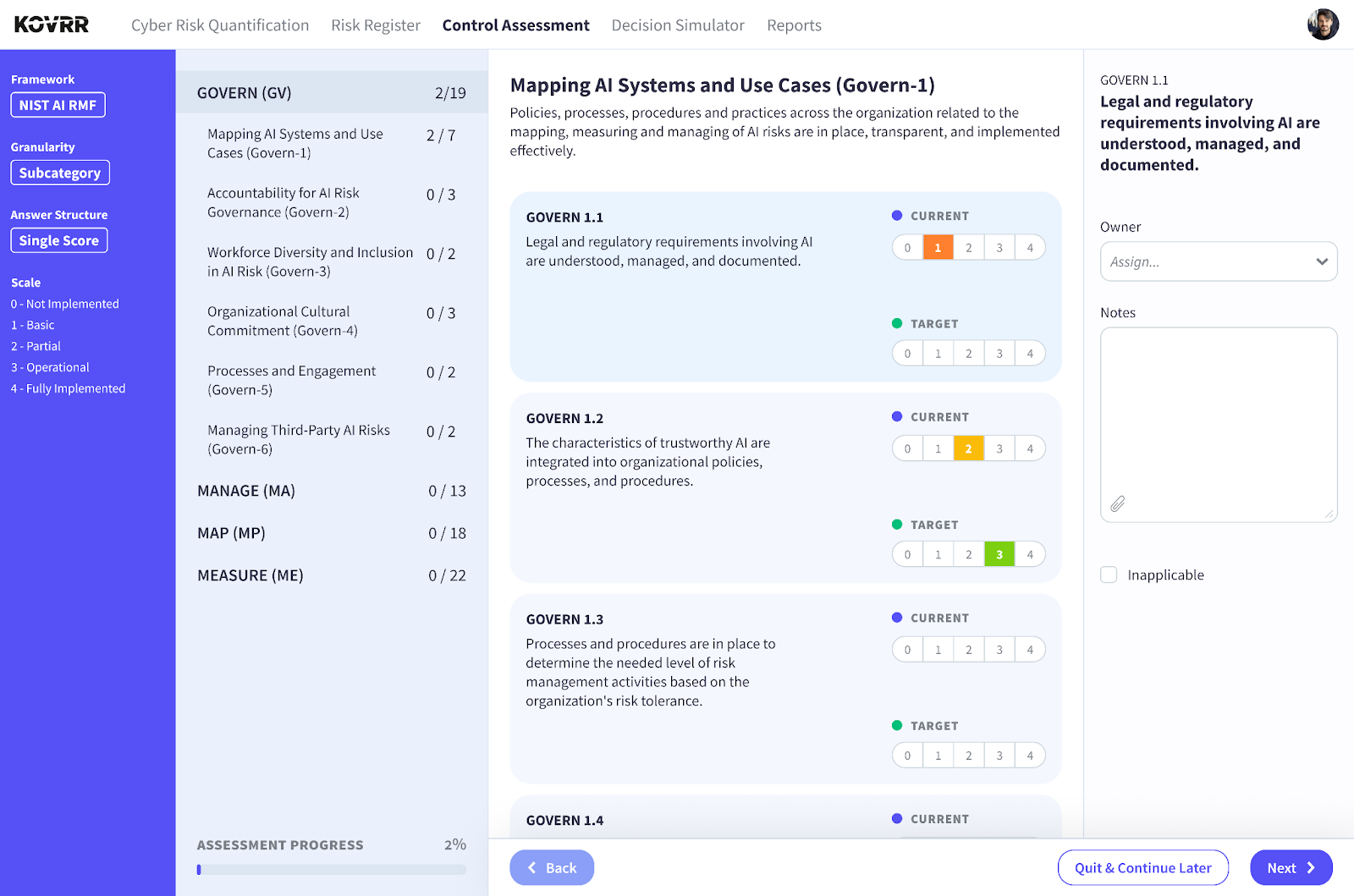

- Structured assessments based on frameworks like NIST AI RMF and ISO 42001 help evaluate control maturity and uncover critical weaknesses.

- Quantification builds on those assessments, translating AI exposure into financial and operational impact that supports informed, defensible decision-making.

- Sustained visibility evolves into strategic awareness, enabling organizations to anticipate disruption, adapt governance, and strengthen resilience as AI reliance deepens.

The Rising Importance of AI Visibility

Generative AI (GenAI) and other artificial intelligence (AI) systems are now deeply embedded in business processes across industries. Once largely speculative, the technology is significantly influencing operations and reshaping the very processes by which high-level decisions are made. Unfortunately, each new AI implementation and use case introduces new threat exposure, and most enterprises still cannot see where these risks originate or how they accumulate.

The absence of visibility into AI assets leaves governance efforts adrift. Programs designed to establish safeguards and policies may look rigorous at first, but without a direct line of sight into how AI systems are deployed and what data they are fed, security and risk managers will struggle to address the conditions that actually drive the most exposure. What emerges instead is a stark disconnect between documented oversight (or the lack of it) and day-to-day usage, which makes risk management inconsistent and undermines accountability.

Adopting mechanisms and tools that provide AI visibility, such as those that identify where AI assets are in use and evaluate the maturity of existing controls, is critical in today’s AI-centered business environment. Visibility in this sense is a practical capability, not an abstract concept: it enables governance, risk, and compliance (GRC) teams to benchmark safeguards against recognized frameworks, prepare for regulatory expectations, and focus resources on the measures that do the most to build resilience.

The Current Gap in AI Oversight

Compared with other technological advances that took years to become fully embedded, AI has moved into enterprise operations at remarkable speed, and governance practices have not kept up. Research from IBM and the Ponemon Institute, for instance, found that 63% of the breached organizations studied in 2025 either had no AI governance policy or were still developing one. This lack of structure has left risk managers struggling to establish even a baseline view of where AI assets exist and how they are being used.

One of the primary reasons oversight has fallen so far behind is the way AI enters the business. Although some deployments are officially sanctioned and tied to strategic initiatives, many others appear informally, introduced by individual teams or business units without coordination. The pace and inconsistency of these deployments fragment governance, leaving safeguards applied unevenly, if at all, and accountability often undefined.

Regulatory demands further increase the work organizations must do to close the governance gap. The EU AI Act, for instance, requires systematic assessment of how high-risk AI systems are deployed and safeguarded, and other jurisdictions, including Canada and the UK, have been working on their own AI legislation.

Without visibility into AI assets, enterprises cannot benchmark controls, verify compliance, or identify which weaknesses pose the greatest threat. Oversight that defaults to reactive practices leaves organizations exposed to scrutiny from regulators and stakeholders and, worse, to losses that could have been prevented.

What Is AI Visibility?

AI visibility is an iterative process that allows organizational stakeholders to understand how GenAI and other AI assets are woven into their operations and where that usage creates exposure. The process, made considerably more efficient by software tools that add structure and analytical insight, gives enterprises the foundational information they need to minimize that risk in a consistent, defensible manner. It is the first step toward oversight; without it, governance has no anchor.

This process spans several dimensions, including:

- AI asset discovery and inventory building: Stakeholders identify and catalog AI assets across the enterprise, whether introduced formally or informally by teams and employees. This work forms the basis of a comprehensive AI asset inventory that details how these systems interact with business functions and what types of information they ingest.

- AI safeguard maturity: Stakeholders assess the strength of existing AI risk controls and measure their effectiveness in practice. Frameworks such as the NIST AI RMF and ISO 42001 help guide this evaluation. Target maturity levels are typically defined as well, marking the starting point of an improvement roadmap.

- AI exposure analysis: Stakeholders examine how deployments and safeguards interact to generate measurable AI risk exposure. The results provide a view of both the likelihood and the impact of different scenarios, giving GRC and risk managers a basis for prioritizing mitigation efforts and building cost-effective resilience programs.
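For readers who want a concrete picture, the first two dimensions can be sketched as simple records in Python. This is a minimal illustration under stated assumptions: the `AIAsset` and `ControlScore` fields, the 0 to 5 maturity scale, and the control labels are all hypothetical and are not taken from the NIST AI RMF, ISO 42001, or any product's schema.

```python
from dataclasses import dataclass, field


@dataclass
class AIAsset:
    """Hypothetical inventory record for one AI system."""
    name: str
    owner: str
    business_function: str
    data_types: list[str] = field(default_factory=list)
    sanctioned: bool = True  # False for tools introduced informally by teams


@dataclass
class ControlScore:
    """Current and target maturity for one control on an assumed 0-5 scale."""
    control_id: str
    current: int
    target: int

    @property
    def gap(self) -> int:
        # Shortfall against the target; 0 if the control already meets it.
        return max(self.target - self.current, 0)


def unsanctioned(inventory: list[AIAsset]) -> list[str]:
    """Names of assets that were not formally approved."""
    return [a.name for a in inventory if not a.sanctioned]


def prioritize_gaps(scores: list[ControlScore]) -> list[tuple[str, int]]:
    """Controls below target, largest shortfall first (ties broken by ID)."""
    below = [(s.control_id, s.gap) for s in scores if s.gap > 0]
    return sorted(below, key=lambda item: (-item[1], item[0]))


# Hypothetical example data
inventory = [
    AIAsset("support-chatbot", "Customer Ops", "customer service", ["customer PII"]),
    AIAsset("meeting-summarizer", "Sales", "sales", ["call transcripts"], sanctioned=False),
]
scores = [
    ControlScore("ai-inventory", current=1, target=4),
    ControlScore("data-provenance", current=2, target=3),
    ControlScore("incident-response", current=3, target=3),
]
print(unsanctioned(inventory))  # ['meeting-summarizer']
print(prioritize_gaps(scores))  # [('ai-inventory', 3), ('data-provenance', 1)]
```

Sorting by gap size is only one possible prioritization rule; an exposure analysis would typically weigh each gap by the risk it leaves open rather than by its size alone.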

Together, these dimensions turn scattered observations into a cohesive view of AI assets and their usage. With that view established, leaders have a solid starting point for building AI governance programs. The management maxim often attributed to Peter Drucker, “you can’t manage what you don’t measure,” applies here as well: structured visibility turns AI from an opaque source of risk into something that can be assessed and, ultimately, mitigated.

Why AI Visibility Is Essential for Governance and Resilience

AI visibility provides detailed, foundational insight into where GenAI systems are in place and how they are being used, but, more critically, it establishes the conditions under which governance, compliance, and long-term resilience strategies can be built. Without visibility, AI oversight becomes reactive. Resources are inevitably wasted, and broader business plans rest on shaky ground, developed without a full picture of how AI assets affect risk and performance.

With visibility established, however, leaders gain a vantage point they cannot achieve through policy documents or compliance checklists alone. Beyond crafting more effective programs, they are equipped to communicate AI's implications to boards and other executives using robust evidence rather than scattered assumptions. Relevant stakeholders can then collaborate to direct resources toward the exposures that could cause the most significant damage, whether financial, operational, or otherwise.

AI visibility also strengthens an organization's standing under external scrutiny, as regulators and auditors increasingly expect demonstrable proof that AI risks are being addressed systematically. With the right visibility tools and processes in place, enterprises can show that their resilience strategies are executable rather than merely aspirational, supporting compliance and giving shareholders confidence in the organization’s ability to withstand disruption.

How AI Risk Assessments Deliver Visibility

AI risk assessments, such as the one offered by Kovrr, are the practical mechanism through which organizations achieve visibility into their AI assets at scale. They provide a structured lens for risk managers to evaluate safeguards, benchmark maturity levels, and identify the control gaps that leave the organization exposed. Unlike ad hoc reviews, SaaS-based assessments allow GRC leaders to apply a methodology that can be repeated across business units, ensuring results are comparable and aggregatable.

Typically, a risk assessment aligns with recognized frameworks or standards, which, in the case of AI, include the NIST AI RMF and ISO 42001. The assessment measures how effectively the organization implements the controls and practices these standards describe, giving stakeholders a basis for a plan that strengthens AI governance and reduces risk where it matters most.

For example, subcategory GOVERN 1.6 of the NIST AI RMF calls for mechanisms to inventory AI systems, resourced according to organizational risk priorities. When an assessment benchmarks an organization’s current practices against this outcome, gaps such as undocumented applications or shadow deployments become visible immediately, allowing remediation efforts to be targeted and sequenced effectively.
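At its simplest, the inventory comparison described above is a set difference. The sketch below assumes a hypothetical upstream discovery step (for instance, one drawing on network or expense data) that yields the names of AI tools actually in use; the function name and all asset names are illustrative only.

```python
def find_inventory_gaps(documented: set[str], discovered: set[str]) -> dict[str, list[str]]:
    """Compare the documented AI inventory with assets actually observed in use."""
    return {
        # In use but absent from the inventory: candidate shadow deployments.
        "undocumented": sorted(discovered - documented),
        # Listed but not observed: possibly retired tools or stale records.
        "not_observed": sorted(documented - discovered),
    }


# Hypothetical example data
documented = {"support-chatbot", "code-assistant"}
discovered = {"code-assistant", "meeting-summarizer", "resume-screener"}
print(find_inventory_gaps(documented, discovered))
# {'undocumented': ['meeting-summarizer', 'resume-screener'], 'not_observed': ['support-chatbot']}
```

Real discovery is the hard part; once it produces a list of names, surfacing the gap against the documented inventory is mechanical.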

Moreover, when conducted continuously, assessments yield insights that extend beyond any individual evaluation, potentially highlighting systemic weaknesses, such as recurring lapses in maintaining inventories across regions, that require enterprise-level attention. Once these patterns surface, leaders can determine which weaknesses warrant immediate action and, where necessary, make accountability explicit by assigning ownership of each issue.
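Spotting a recurring lapse across regions is essentially a counting exercise, provided every unit is assessed against the same control identifiers; that shared structure is what makes a repeatable methodology comparable and aggregatable. The sketch below assumes each unit's results have been reduced to a set of hypothetical control IDs rated below target.

```python
from collections import Counter


def recurring_lapses(failed_by_unit: dict[str, set[str]], min_units: int = 2) -> list[tuple[str, int]]:
    """Controls that failed in at least `min_units` units, most widespread first.

    `failed_by_unit` maps each region or business unit to the control IDs
    its latest assessment rated below target.
    """
    counts = Counter(c for failed in failed_by_unit.values() for c in failed)
    recurring = [(c, n) for c, n in counts.items() if n >= min_units]
    return sorted(recurring, key=lambda item: (-item[1], item[0]))


# Hypothetical example data
failed_by_unit = {
    "EMEA": {"ai-inventory", "vendor-review"},
    "APAC": {"ai-inventory"},
    "Americas": {"ai-inventory", "model-monitoring", "vendor-review"},
}
print(recurring_lapses(failed_by_unit))  # [('ai-inventory', 3), ('vendor-review', 2)]
```

A control that fails in every region, like the inventory control here, points to an enterprise-level problem rather than a local one.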

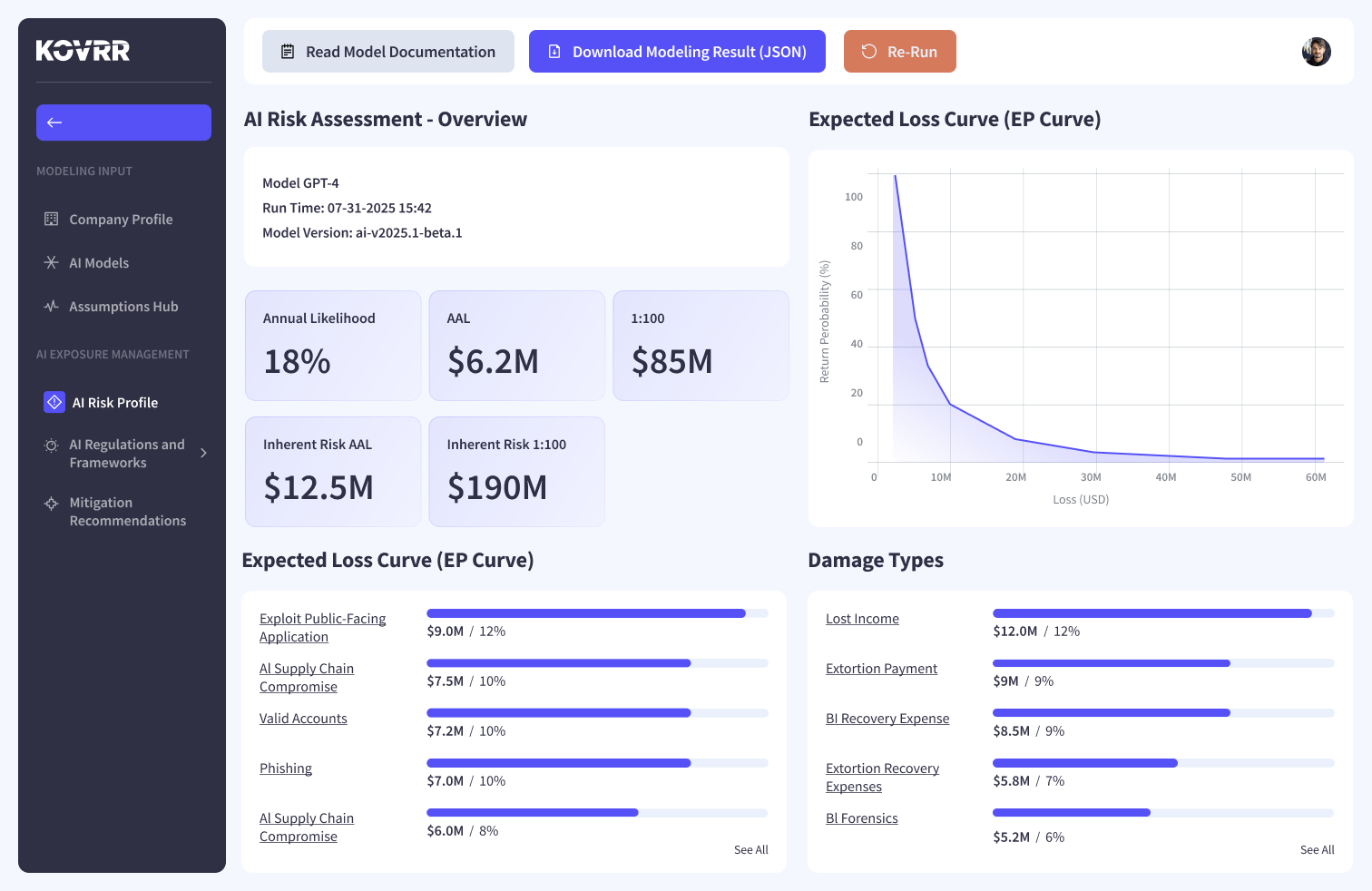

How Quantification Elevates AI Visibility Into Impact

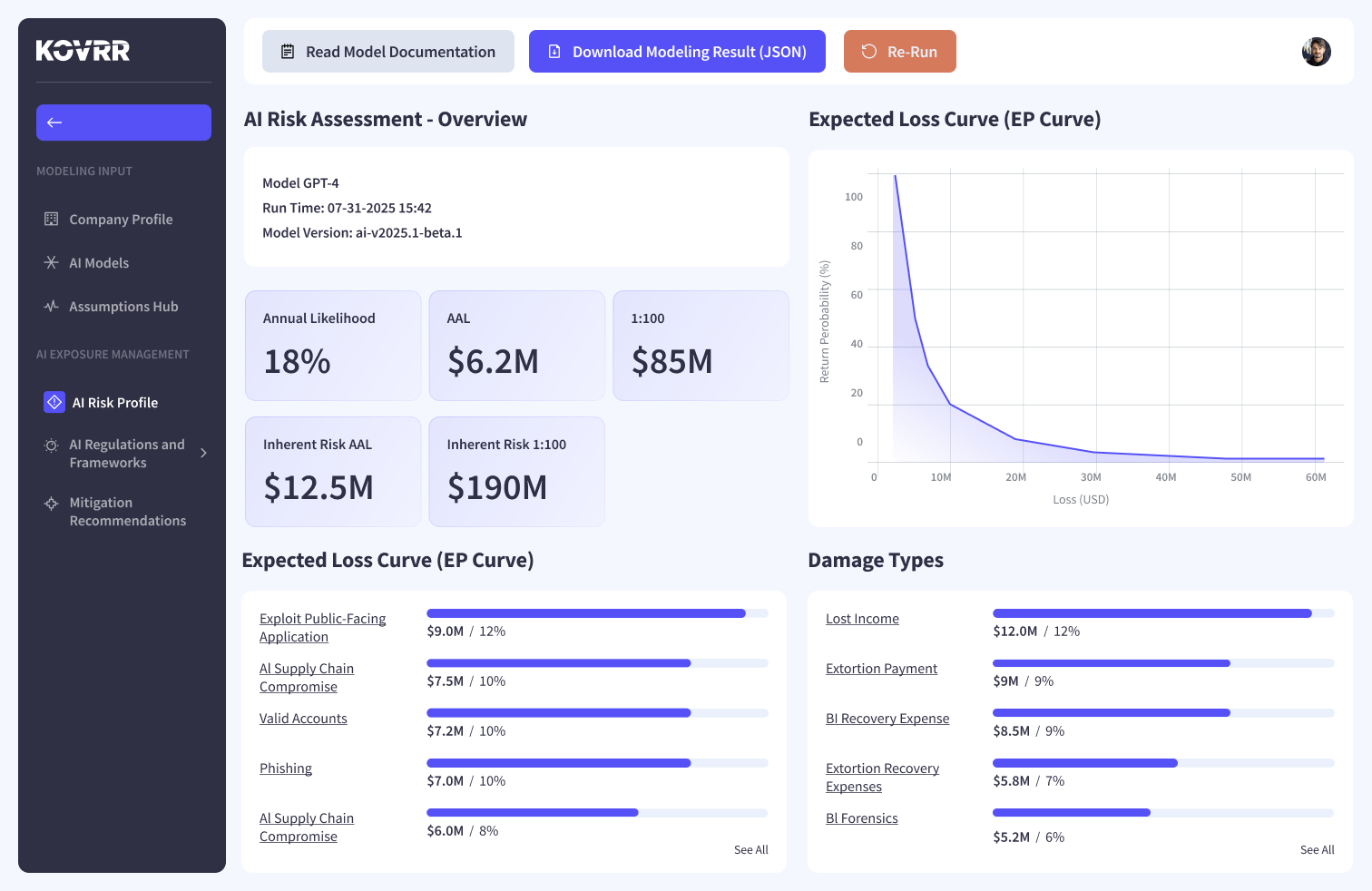

While assessments provide a structured view of where AI assets are used and how safeguards are performing, quantification extends that visibility into measurable, tangible terms. Quantification models incorporate assessment results to forecast both the likelihood and the severity of AI-related incidents. The outputs include high-level metrics such as annualized loss expectancy (ALE), along with more granular details such as the breakdown of damage types per scenario, providing the insight needed to design well-targeted mitigation strategies.
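A common textbook definition of ALE multiplies the expected number of incidents per year by the expected loss per incident. The sketch below applies that definition to two hypothetical scenarios with made-up figures and keeps a per-damage-type breakdown. It is a simplified illustration only, not Kovrr's quantification model, whose details the text does not describe.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical AI loss scenario with assumed inputs."""
    name: str
    annual_frequency: float           # expected incidents per year
    loss_per_event: dict[str, float]  # expected loss per incident, by damage type

    def ale(self) -> float:
        # ALE = expected incidents per year x expected loss per incident
        return self.annual_frequency * sum(self.loss_per_event.values())

    def ale_by_damage_type(self) -> dict[str, float]:
        # Same calculation, kept separate for each damage type
        return {kind: self.annual_frequency * loss for kind, loss in self.loss_per_event.items()}


# Hypothetical scenarios with invented figures
scenarios = [
    Scenario("sensitive data pasted into a public GenAI tool", 0.5,
             {"regulatory": 200_000, "incident response": 100_000, "legal": 50_000}),
    Scenario("faulty model output in automated pricing", 0.25,
             {"revenue loss": 400_000, "remediation": 100_000}),
]
for s in scenarios:
    print(f"{s.name}: ALE = {s.ale():,.0f}")
print(f"Total ALE = {sum(s.ale() for s in scenarios):,.0f}")
```

With these inputs, the first scenario has an ALE of 175,000, the second 125,000, and the total is 300,000. Because ALE is a long-run average, it can understate the impact of rare but severe years.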

Quantification is not only useful to AI management and GRC teams; it also gives boards and executives actionable information. Instead of abstract maturity scores, leadership can review evidence expressed in monetary terms, enabling clearer prioritization of investments and stronger justification for resource allocation. Quantification thus extends visibility across the entire organization, making AI risk understandable even to non-technical stakeholders.

Finally, quantification introduces foresight. By modeling a range of possible scenarios, including low-frequency, high-impact events, organizations can stress-test their safeguards and prepare for disruptions before they materialize. This forward-looking view moves AI oversight from reactive adjustments to anticipatory planning, strengthening the enterprise’s capacity to withstand unexpected shocks.
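One standard way to stress-test against low-frequency, high-impact events is a frequency-severity Monte Carlo simulation: draw the number of incidents in each simulated year, draw a loss for each incident, and examine the tail of the resulting annual-loss distribution. The sketch below assumes a Poisson incident count and lognormal severity with invented parameters; these are common modeling choices, not necessarily the ones any particular vendor uses.

```python
import math
import random
import statistics


def sample_poisson(rng: random.Random, lam: float) -> int:
    """Poisson draw via Knuth's multiplication method (fine for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, rng.random()
    while p > threshold:
        k += 1
        p *= rng.random()
    return k


def simulate_annual_losses(freq: float, median_loss: float, sigma: float,
                           years: int, seed: int = 7) -> list[float]:
    """Total loss per simulated year: Poisson incident count, lognormal severity."""
    rng = random.Random(seed)
    mu = math.log(median_loss)  # a lognormal's median equals exp(mu)
    totals = []
    for _ in range(years):
        n_incidents = sample_poisson(rng, freq)
        totals.append(sum(rng.lognormvariate(mu, sigma) for _ in range(n_incidents)))
    return totals


# Invented parameters: 0.3 incidents per year, median loss 250,000 per incident
losses = simulate_annual_losses(freq=0.3, median_loss=250_000, sigma=1.2, years=20_000)
mean_loss = statistics.fmean(losses)
p99_loss = statistics.quantiles(losses, n=100)[98]  # 99th percentile cut point
print(f"Mean annual loss: {mean_loss:,.0f}")
print(f"99th percentile annual loss: {p99_loss:,.0f}")
```

Comparing the mean with the 99th percentile (roughly a 1-in-100-year loss) shows how far a bad year can exceed an average one, which is the gap a stress test is meant to expose.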

Sustaining Governance Through Continuous Visibility

AI visibility has rapidly become a prerequisite for responsible governance, yet many organizations have still not achieved it. Oversight structures can't be built, let alone function, when leaders lack a robust understanding of where AI operates, what data it interacts with, and how safeguards are performing. A structured AI visibility mechanism bridges that divide, grounding oversight in evidence and turning governance into a practical exercise.

The organizations most effective at minimizing AI exposure, then, won't be those with the most comprehensive policies and practices, but those with the best-informed, most targeted ones. Visibility creates that intelligence, revealing how AI assets intersect with business functions and which resulting risks are material enough to demand attention. With scalable AI visibility tools, such as SaaS-based risk assessments and AI risk quantification, security and risk managers can ensure that their governance is built on measurable insight.

Once that visibility is sustained, it evolves into strategic awareness. Leaders can anticipate disruption and guide their organizations with a sharper understanding of how AI assets affect performance and resilience. Over time, this awareness shapes a governance model that grows alongside AI implementation itself, capable of adapting as systems advance, regulations mature, and reliance deepens across the enterprise.

Kovrr’s AI Risk Assessment and Quantification modules were developed to support this level of visibility, helping enterprises translate understanding into action as their portfolio of AI assets expands.

Schedule a demo today to see how these capabilities can strengthen your organization’s governance and resilience.
